Hi,

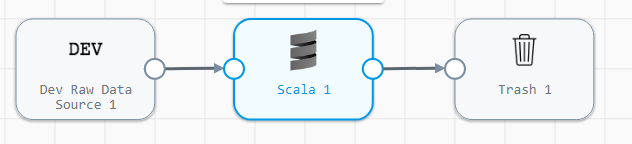

I am trying to read from MongoDB. I have set the following in the Scala code for this pipeline:

```scala
val df = spark.read
  .format("com.mongodb.spark.sql.DefaultSource")
  .option("spark.mongodb.input.uri", "mongodb://mu:mp@mh:port/md.cname?authSource=mad&readPreference=primary&ssl=false")
  .load()
```

where the abbreviations stand for:

- `mad`: mongodb.authentication-database
- `mu`: mongodb.username
- `mh`: mongodb.host
- `md`: mongodb.database
- `cname`: collection name
- `mp`: mongodb.password
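For reference, the input URI can be assembled from those individual settings. The sketch below is a hypothetical helper: `MongoUriSketch`, `MongoSettings` and the example port `27017` are assumptions, not part of the original pipeline. It percent-encodes the username and password, because characters such as `@`, `:` or `/` in credentials would otherwise break the URI.

```scala
import java.net.URLEncoder

object MongoUriSketch {
  // Hypothetical holder for the values abbreviated above.
  final case class MongoSettings(
      username: String,     // mu
      password: String,     // mp
      host: String,         // mh
      port: Int,            // port (example value, assumed)
      database: String,     // md
      collection: String,   // cname
      authDatabase: String  // mad
  )

  // Percent-encode a credential. URLEncoder does form encoding (space -> "+"),
  // so "+" is swapped for "%20"; a literal "+" was already encoded as "%2B".
  def enc(s: String): String =
    URLEncoder.encode(s, "UTF-8").replace("+", "%20")

  // Builds a URI with the same shape as the one passed to .option(...).
  def inputUri(s: MongoSettings): String =
    s"mongodb://${enc(s.username)}:${enc(s.password)}@${s.host}:${s.port}/" +
      s"${s.database}.${s.collection}?authSource=${s.authDatabase}" +
      "&readPreference=primary&ssl=false"

  def main(args: Array[String]): Unit = {
    val settings = MongoSettings("mu", "mp", "mh", 27017, "md", "cname", "mad")
    println(inputUri(settings))
  }
}
```

Running `main` prints `mongodb://mu:mp@mh:27017/md.cname?authSource=mad&readPreference=primary&ssl=false`.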

Error:

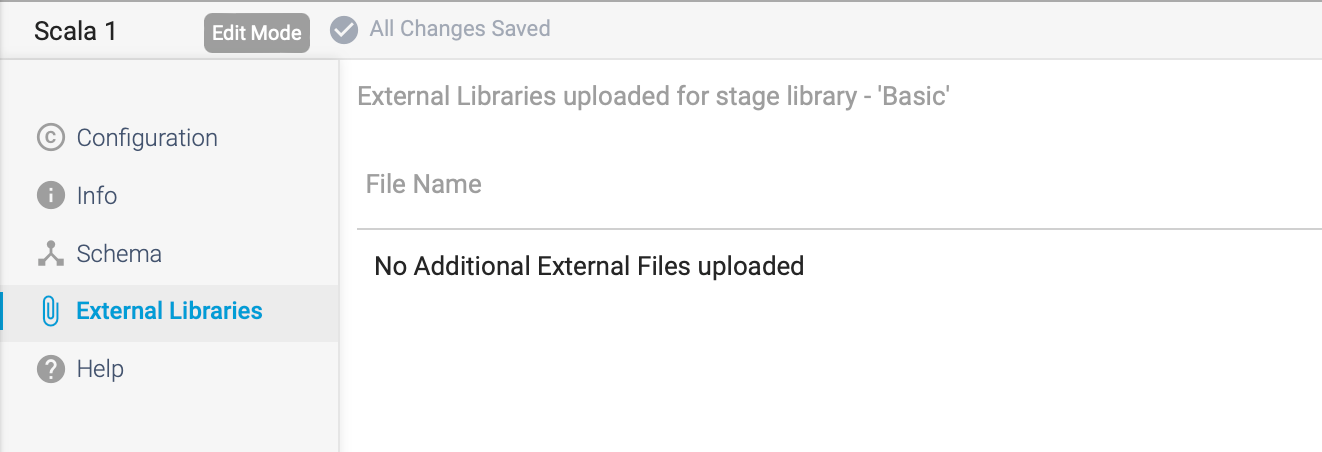

```
TRANSFORMER_00 - TRANSFORMER_00 - Failed at operator: Operator: [Type: class com.streamsets.pipeline.spark.transform.scala.ScalaTransform id: Scala_01]: Class: Failed to find data source: com.mongodb.spark.sql.DefaultSource. Please find packages at http://spark.apache.org/third-party-projects.html was not found. This usually happens when running on an unsupported version of Spark, The offsets for failure occurred was 'Unknown'
```
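The message says Spark could not load the class `com.mongodb.spark.sql.DefaultSource`. As a rough diagnostic sketch (the object name `DataSourceCheck` is hypothetical, and the code does not use Spark itself), the following checks whether that class is visible to the current class loader. If it reports `false` when run in the same environment as the Scala processor, the MongoDB Spark connector jar is most likely not on the classpath.

```scala
object DataSourceCheck {
  // Returns true if the named class can be loaded (without initializing it)
  // through the given class loader.
  def isLoadable(className: String, loader: ClassLoader): Boolean =
    try {
      Class.forName(className, false, loader)
      true
    } catch {
      case _: ClassNotFoundException | _: NoClassDefFoundError => false
    }

  def main(args: Array[String]): Unit = {
    // Prefer the thread context class loader, falling back to this class's loader.
    val loader = Option(Thread.currentThread.getContextClassLoader)
      .getOrElse(getClass.getClassLoader)
    val provider = "com.mongodb.spark.sql.DefaultSource"
    println(s"$provider loadable: ${isLoadable(provider, loader)}")
  }
}
```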