While trying to ingest XML data from S3 into Snowflake, I am getting the error below:

S3_SPOOLDIR_01 - Failed to process object 'UBO/GSRL_Sample_XML.xml' at position '0': com.streamsets.pipeline.stage.origin.s3.BadSpoolObjectException: com.streamsets.pipeline.api.service.dataformats.DataParserException: XML_PARSER_02 - XML object exceeded maximum length: readerId 'com.dnb.asc.stream-sets.us-west-2.poc/UBO/GSRL_Sample_XML.xml', offset '0', maximum length '2147483647'

The XML file is 4 MB in size.

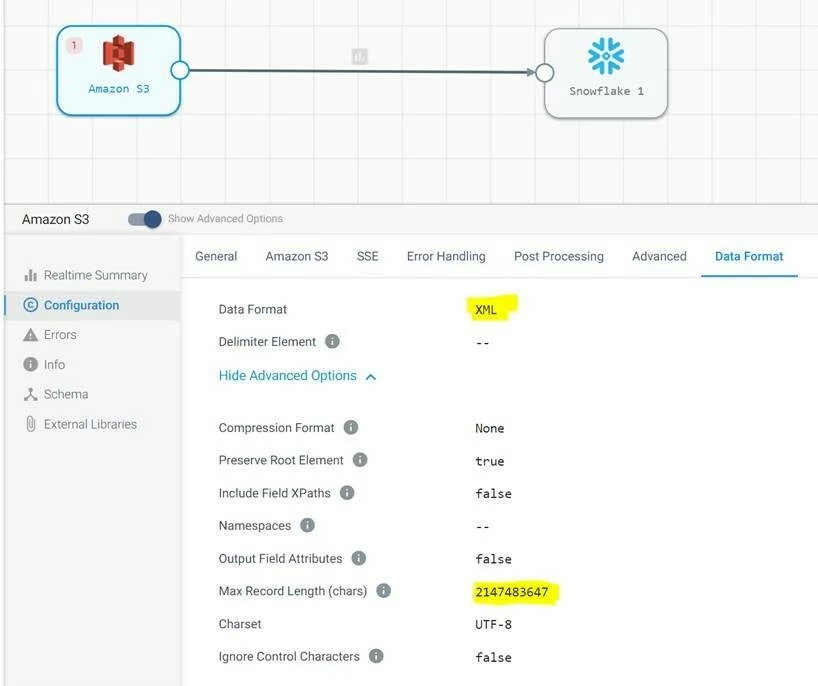

The properties used for the Amazon S3 origin are attached.

I have also increased Max Record Length to its maximum value.

S3 properties: Max Record Length: 2147483647, Data Format: XML

Can you please suggest how to resolve this? Is there a size-related constraint on XML files?

We have successfully loaded smaller files from S3 to Snowflake.
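
For anyone comparing numbers, here is a minimal Python sketch for checking a local copy of the file against the configured limit. It only measures the document: the total character count and the size of the largest direct child of the root element. It does not reproduce how the StreamSets XML parser counts record length, and the function name `measure_xml` is hypothetical.

```python
import xml.etree.ElementTree as ET

# Value of Max Record Length from the S3 origin properties above.
MAX_RECORD_LENGTH = 2147483647


def measure_xml(xml_text: str) -> tuple[int, int]:
    """Return (total characters, characters in the largest direct child of the root)."""
    root = ET.fromstring(xml_text)
    # Serialize each direct child back to text and keep the longest one.
    largest = max(
        (len(ET.tostring(child, encoding="unicode")) for child in root),
        default=0,
    )
    return len(xml_text), largest


# Tiny stand-in document; replace with the contents of the downloaded file.
sample = "<root><rec>aaaa</rec><rec>bb</rec></root>"
total, largest = measure_xml(sample)
print(f"total chars: {total}, largest child: {largest}")
print("whole document under limit:", total <= MAX_RECORD_LENGTH)
```

If both numbers come out far below the limit, as they should for a 4 MB file, the file size alone is unlikely to explain the error.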

Best answer by Dash
