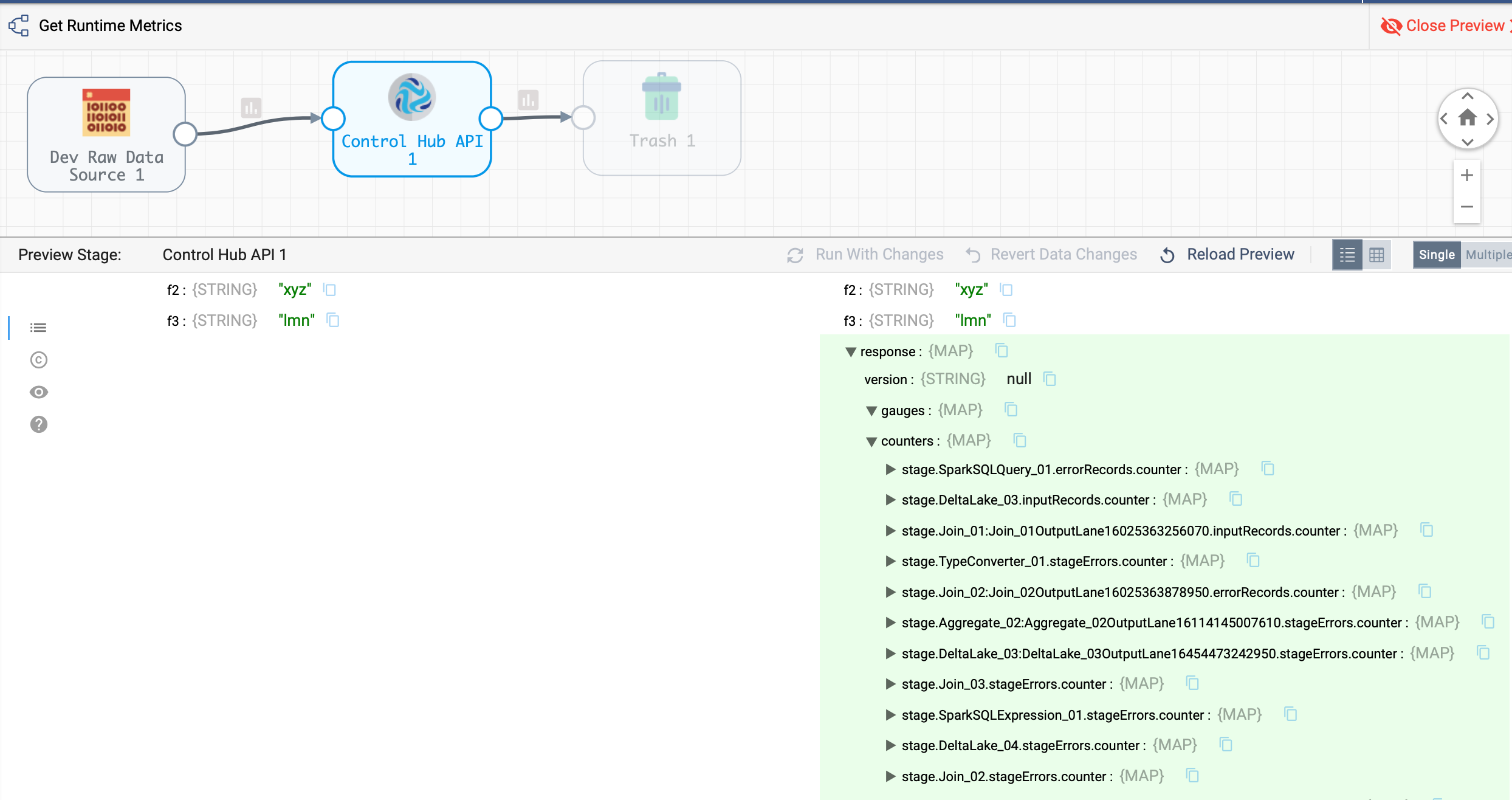

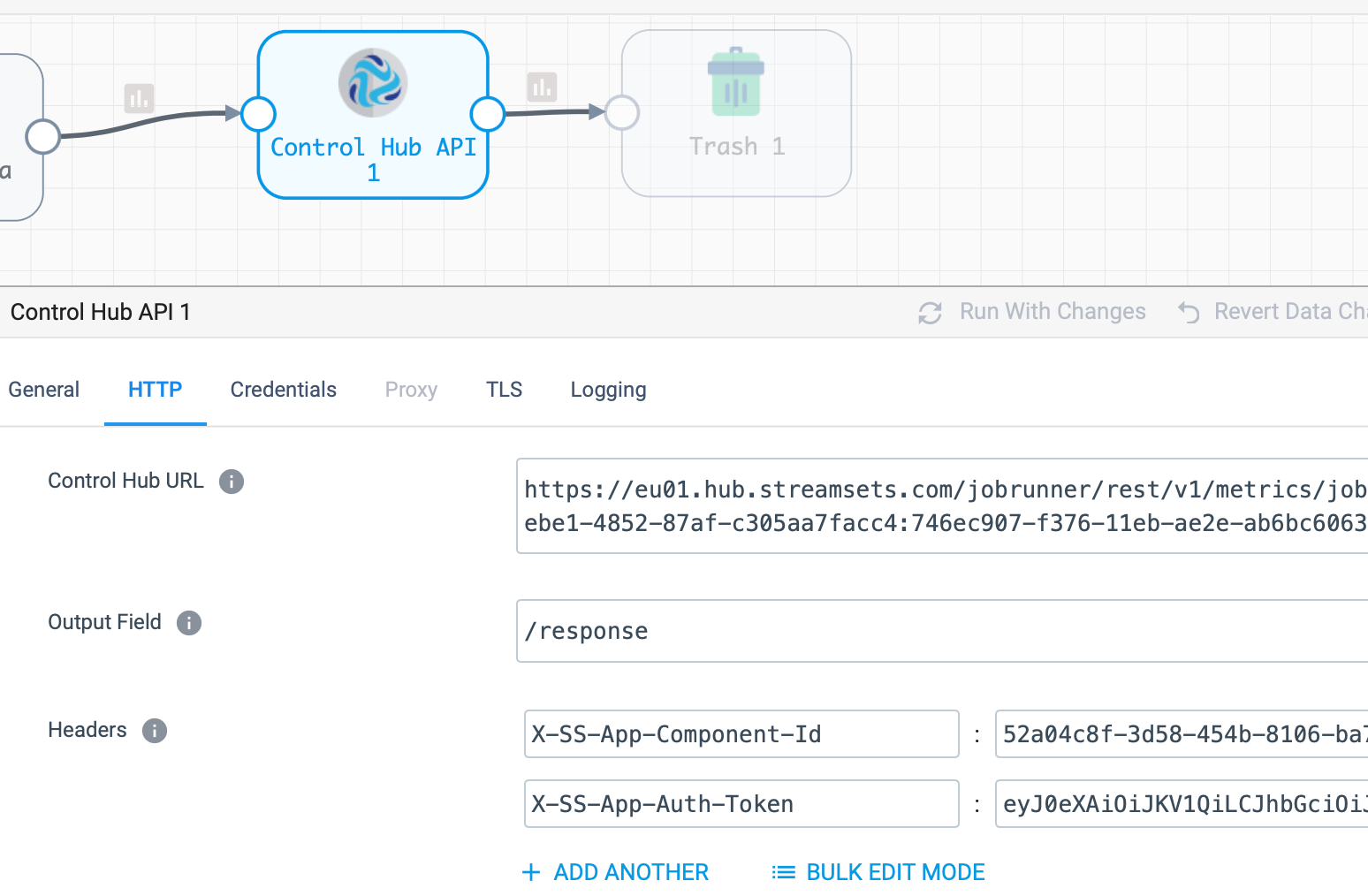

We are ingesting data from an Oracle source using CDC pipelines (StreamSets), so any INSERT, UPDATE, or DELETE that happens at the source gets ingested. The pipelines run 24x7. How can we audit this ingestion, and what logic should the audit checks use?

We are using Databricks for the audit checks.
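
To make the question concrete, here is a minimal sketch of the kind of check we have in mind, written in plain Python rather than Spark so it is easy to read. It assumes we can get, for each audit window, a list of change events seen at the source and a list of change events that landed in the target. `CdcEvent`, `count_events`, `reconcile`, and the window labels are all hypothetical names made up for illustration, not part of StreamSets or Databricks.

```python
from collections import Counter
from typing import Dict, Iterable, NamedTuple, Tuple


class CdcEvent(NamedTuple):
    """One change event (hypothetical shape, for illustration only)."""
    table: str
    op: str      # "INSERT", "UPDATE" or "DELETE"
    window: str  # audit window label, e.g. an hour bucket


Key = Tuple[str, str, str]


def count_events(events: Iterable[CdcEvent]) -> Counter:
    """Count events per (table, op, window)."""
    return Counter((e.table, e.op, e.window) for e in events)


def reconcile(
    source_events: Iterable[CdcEvent],
    target_events: Iterable[CdcEvent],
) -> Dict[Key, Tuple[int, int]]:
    """Return (source_count, target_count) for every key whose counts differ."""
    src = count_events(source_events)
    tgt = count_events(target_events)
    # A Counter returns 0 for a missing key, so events that are absent
    # on one side show up as a mismatch against 0.
    return {
        key: (src[key], tgt[key])
        for key in sorted(src.keys() | tgt.keys())
        if src[key] != tgt[key]
    }


if __name__ == "__main__":
    source = [
        CdcEvent("ORDERS", "INSERT", "10:00"),
        CdcEvent("ORDERS", "INSERT", "10:00"),
        CdcEvent("ORDERS", "DELETE", "10:00"),
    ]
    target = [
        CdcEvent("ORDERS", "INSERT", "10:00"),
        CdcEvent("ORDERS", "DELETE", "10:00"),
    ]
    for key, (s, t) in reconcile(source, target).items():
        print(key, "source:", s, "target:", t)
```

The idea is to compare counts per table, per operation type, and per time window, since the pipeline never stops and a single full-table count would always be a moving target. Is this a reasonable approach, or is there a better pattern for continuous CDC audits?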