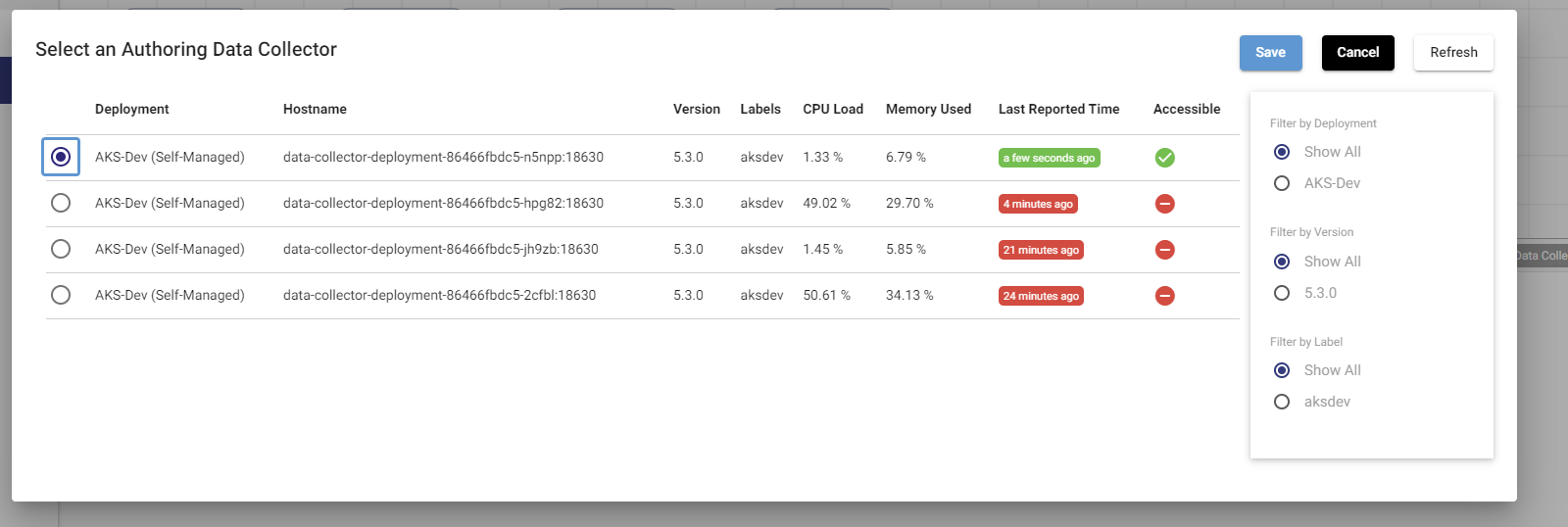

I am using Azure Kubernetes Service (AKS) and have deployed a Docker image that contains a tarball deployment script. When an error occurs in a Control Hub pipeline, AKS creates a new pod and a new engine instance is also created. However, the pipeline is not automatically moved to the new, accessible engine instance. Is there an automated workaround for this use case? Any comments would be appreciated.