Hi,

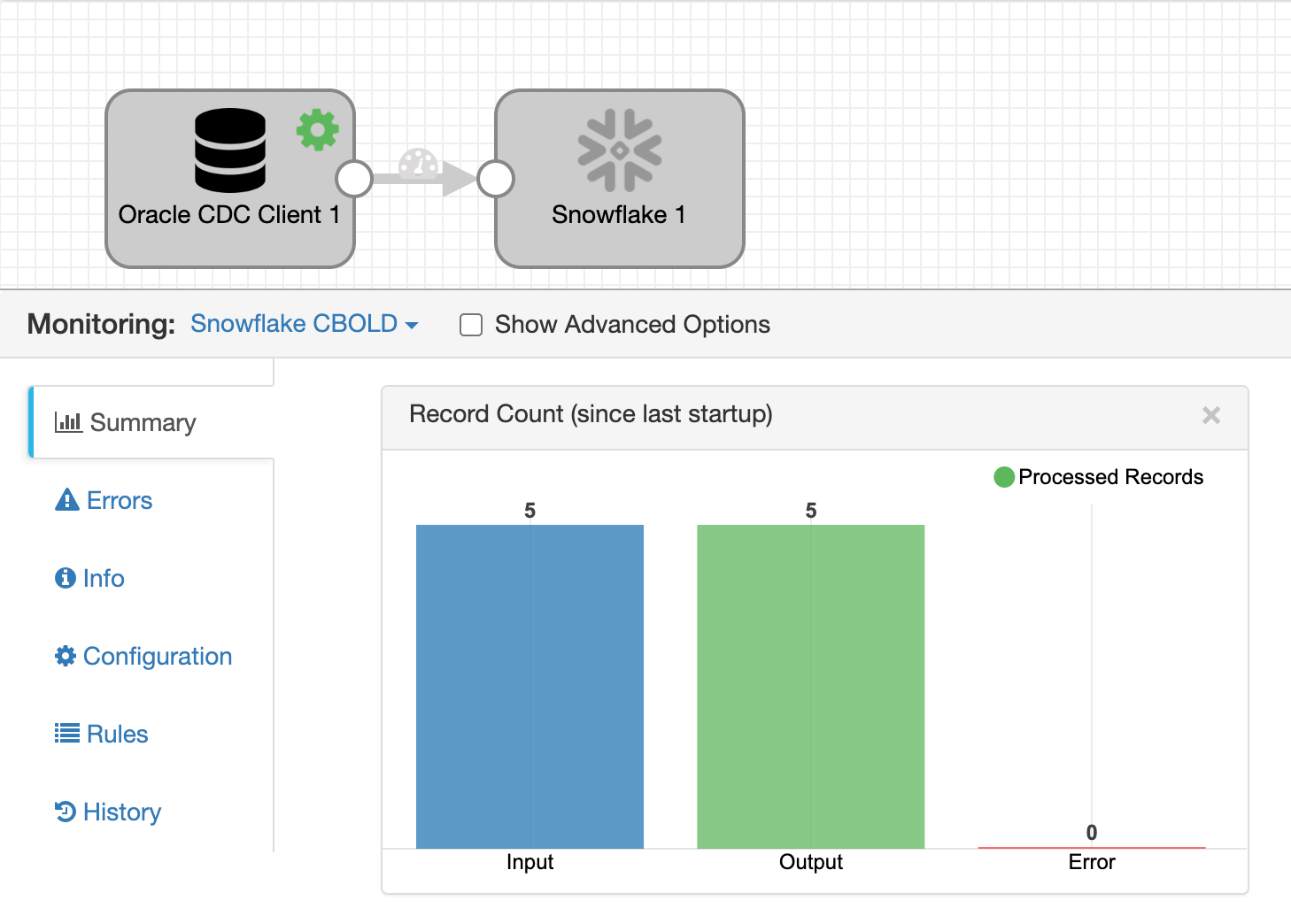

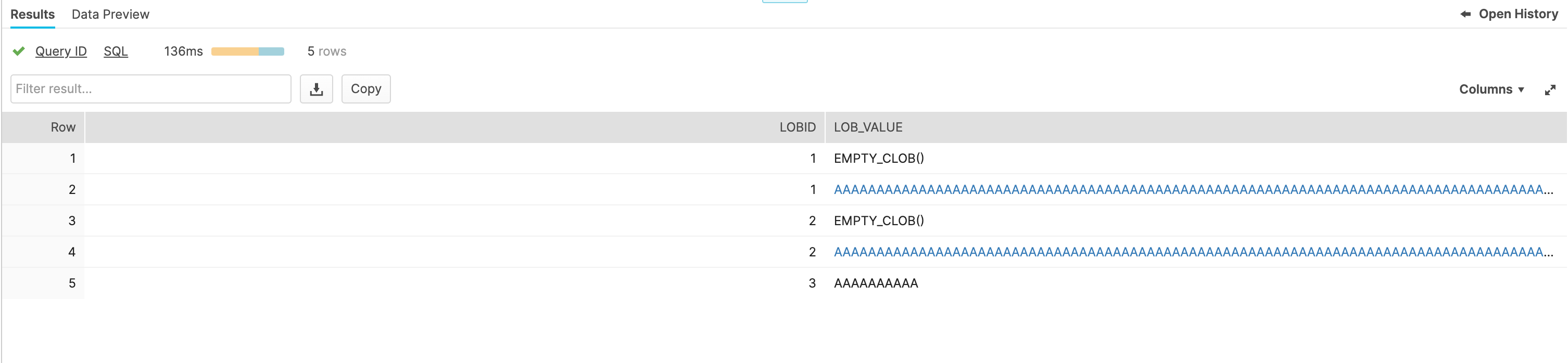

I'm facing an error in a Data Collector pipeline (Oracle CDC to Snowflake) when processing a table with a CLOB column. Inserting a value of length >= 4000 works fine, but any value shorter than that fails with this error:

SNOWFLAKE_28 - Snowflake MERGE for 'sdc-df26c7ce-fd00-4b5d-a3b1-817b9809e0a3.csv.gz' processed '1' CDC records out of '3'

Oracle:

CREATE TABLE TEST_LOB_VAL (lobid NUMBER(10) PRIMARY KEY, lob_value CLOB);

INSERT INTO TEST_LOB_VAL VALUES (1,to_clob(RPAD('AAAAAAAAAAAAAAAAAA',4000,'A')));

INSERT INTO TEST_LOB_VAL VALUES (2,to_clob(RPAD('AAAAAAAAAAAAAAAAAA',2000,'A')));

INSERT INTO TEST_LOB_VAL VALUES (3,'AAAAAAAAAA');

COMMIT;
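
To double-check on the Oracle side which rows fall above and below the 4000 mark, a query along these lines could be run after the commit. This is only a sketch against the test table above; `DBMS_LOB.GETLENGTH` is the standard Oracle function for CLOB length, and the `lob_length` alias is just an illustrative name.

```sql
-- Sketch: report the stored length of each CLOB in the test table,
-- so the rows that fail in the pipeline can be matched to their sizes.
SELECT lobid,
       DBMS_LOB.GETLENGTH(lob_value) AS lob_length
FROM   TEST_LOB_VAL
ORDER  BY lobid;
```

Comparing these lengths with the record count in the SNOWFLAKE_28 message may help confirm whether only the shorter CLOB rows are being dropped from the MERGE.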