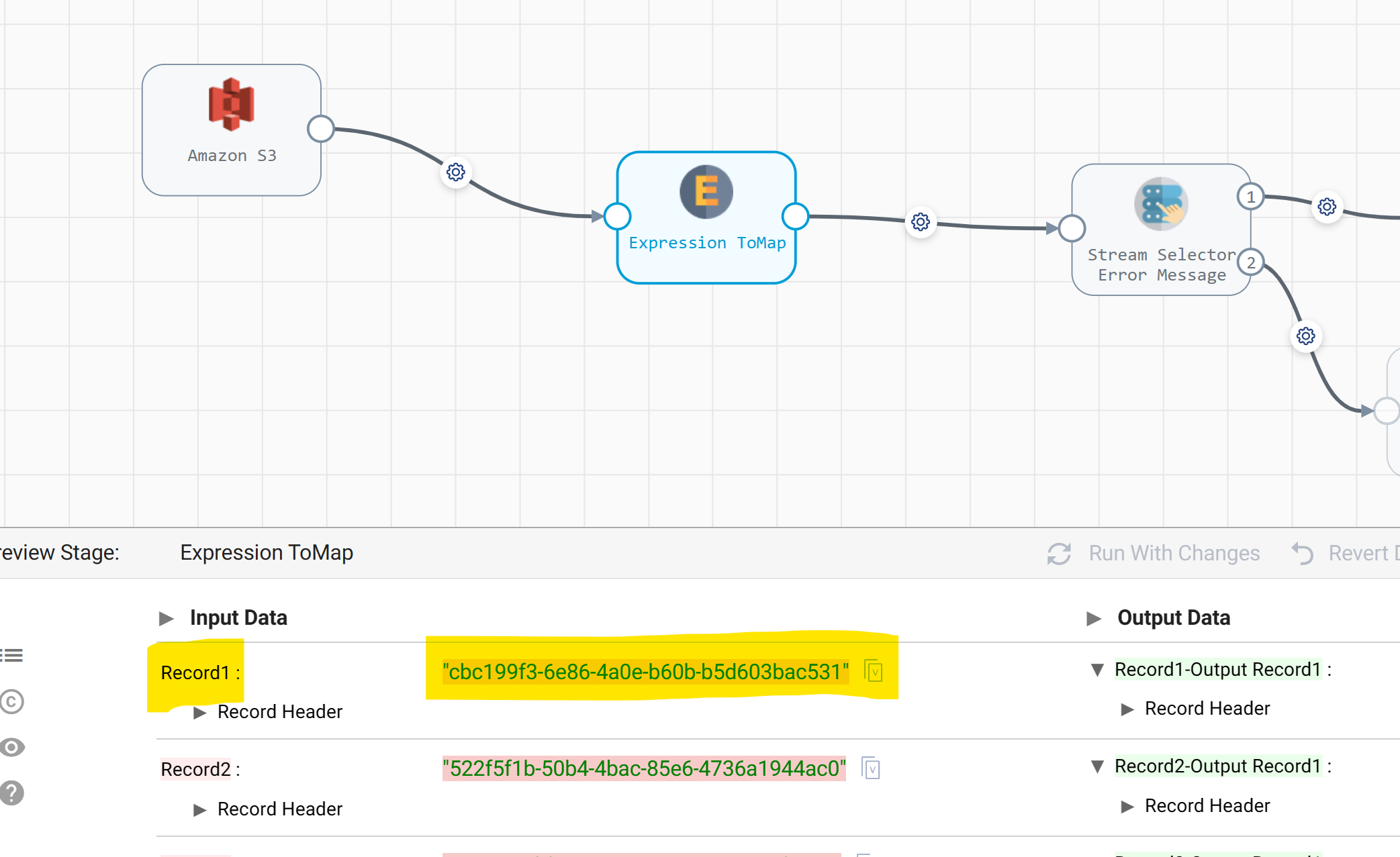

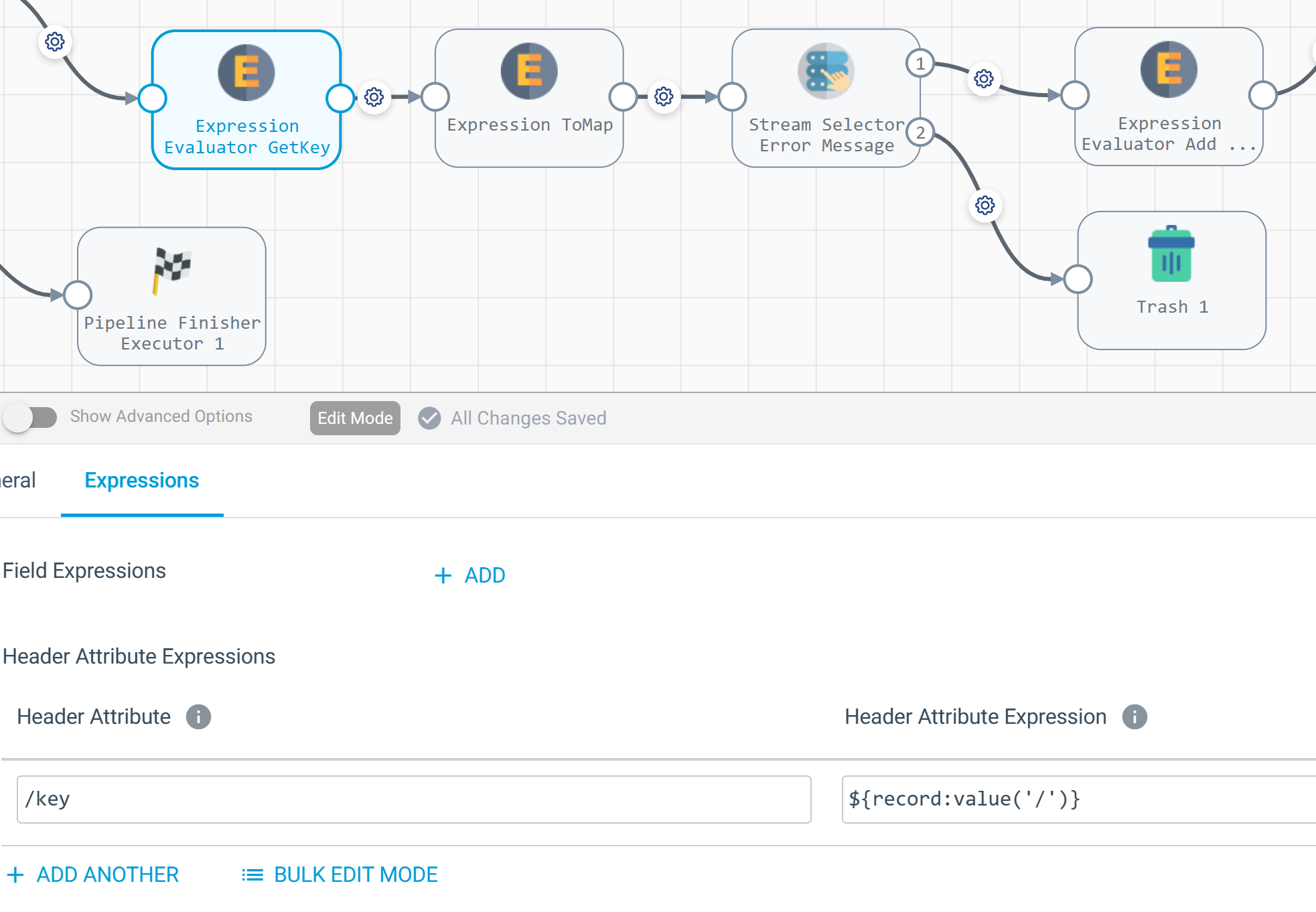

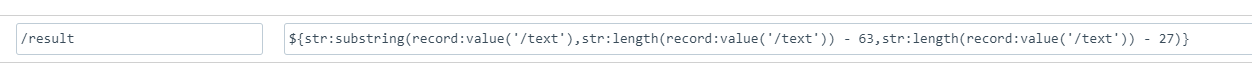

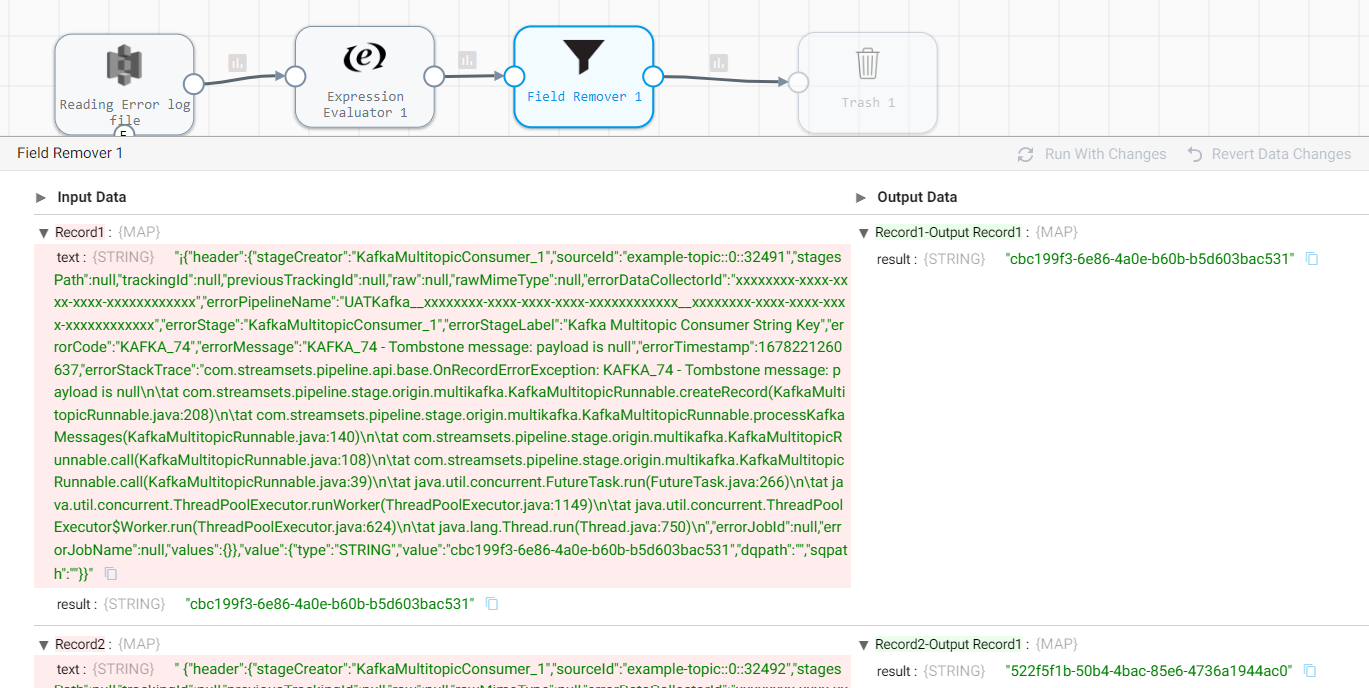

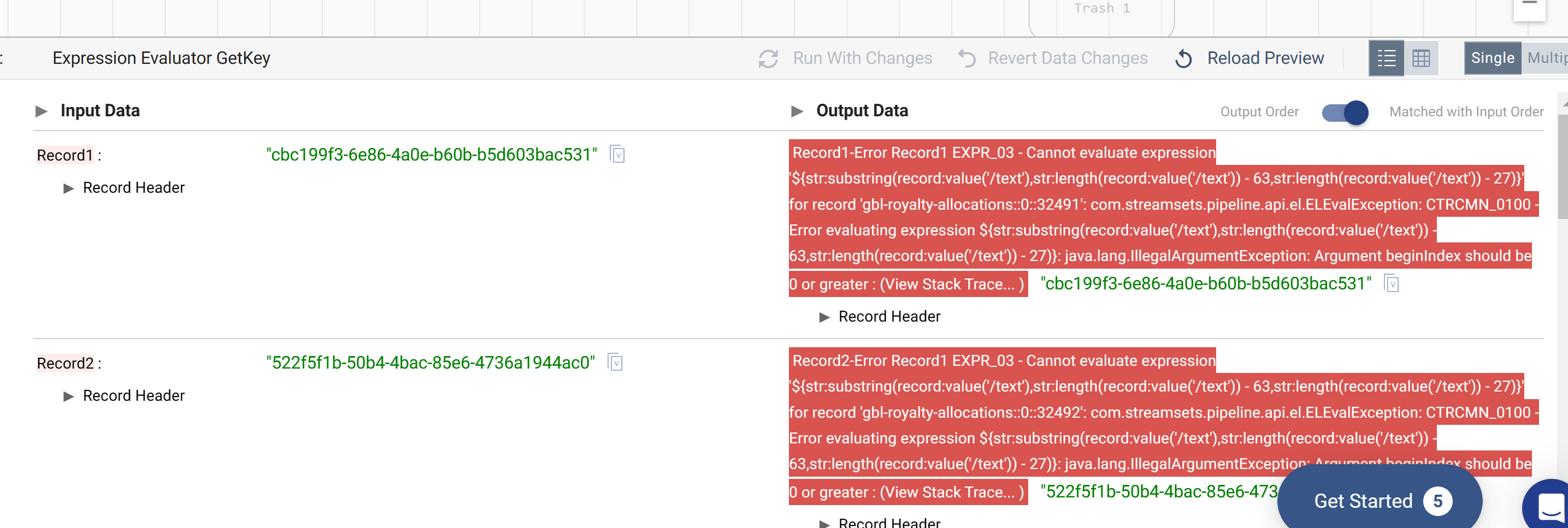

I have a pipeline that reads Kafka records and writes any errors to an S3 bucket. Most of the errors are related to tombstone records. Once my original pipeline has run, I plan to have a second pipeline process the error files on S3. So far so good: I can get the error code from the SDC file using `record:errorCode()`. I also need the Kafka key from the S3 file. What is the highlighted record/field called, and how do I obtain its value with an Expression Evaluator or another processor? Once I convert it to a map, that value is gone.