Hi there,

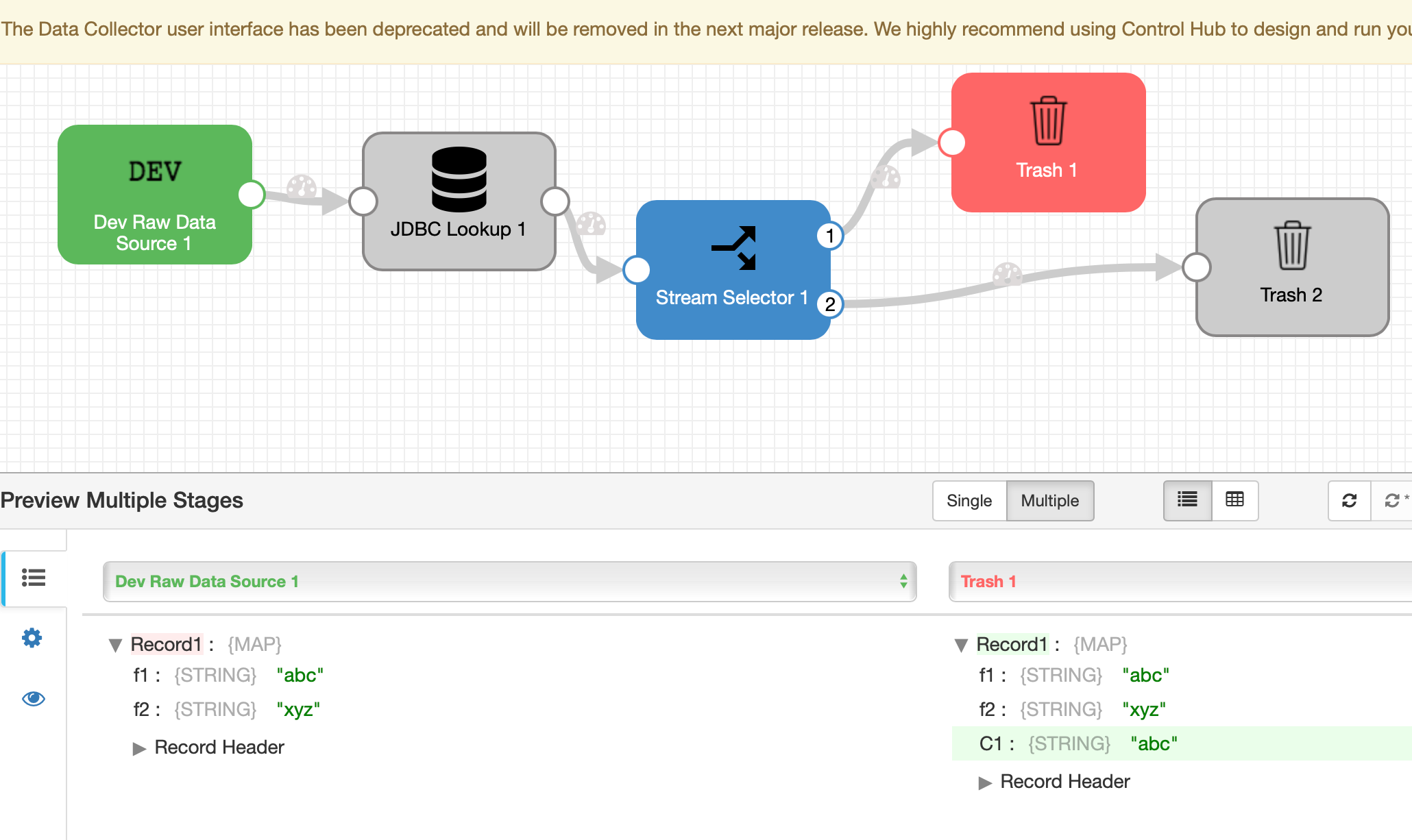

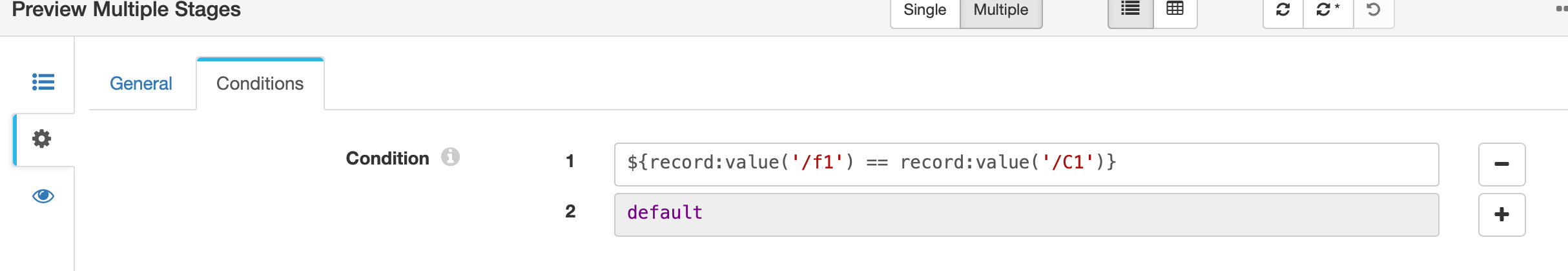

I have a requirement to read data from a Snowflake database and then use a specific column value as a filter condition when reading from a SQL Server database. Has anyone dealt with this kind of design in a Data Collector pipeline?

I am stuck, since I can only read from one source at a time using the JDBC Query origin, and I don’t think I can pass the values from one query as a parameter to another.

Example:

Get the max date of a column from a Snowflake table and use that date value as a filter condition for a SQL Server table.
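
To make the example concrete, here is a rough Python sketch of the logic I am after, outside of Data Collector. It is only an illustration under assumptions: the two in-memory SQLite connections stand in for Snowflake and SQL Server, and the table and column names (`source_table`, `load_date`, `target_table`, `updated_at`) are made up for this post.

```python
import sqlite3


def max_load_date(source_conn):
    """Step 1: read the max date from the first database (Snowflake in my case)."""
    row = source_conn.execute("SELECT MAX(load_date) FROM source_table").fetchone()
    return row[0]


def rows_after(target_conn, cutoff):
    """Step 2: use that value as a bound parameter in the second query (SQL Server in my case)."""
    cursor = target_conn.execute(
        "SELECT id, updated_at FROM target_table WHERE updated_at > ? ORDER BY id",
        (cutoff,),
    )
    return cursor.fetchall()


def demo():
    # Hypothetical stand-in for the Snowflake side.
    source_conn = sqlite3.connect(":memory:")
    source_conn.execute("CREATE TABLE source_table (load_date TEXT)")
    source_conn.executemany(
        "INSERT INTO source_table VALUES (?)",
        [("2024-01-05",), ("2024-01-10",)],
    )

    # Hypothetical stand-in for the SQL Server side.
    target_conn = sqlite3.connect(":memory:")
    target_conn.execute("CREATE TABLE target_table (id INTEGER, updated_at TEXT)")
    target_conn.executemany(
        "INSERT INTO target_table VALUES (?, ?)",
        [(1, "2024-01-01"), (2, "2024-01-15"), (3, "2024-02-01")],
    )

    # ISO-formatted date strings compare correctly as plain text.
    cutoff = max_load_date(source_conn)
    return rows_after(target_conn, cutoff)


if __name__ == "__main__":
    print(demo())
```

What I cannot figure out is how to express this two-step read (first query feeding the second) inside a single pipeline.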

Thanks,

Srini