Hi there,

We are migrating to DataOps Platform.

We run a Self-Managed Environment.

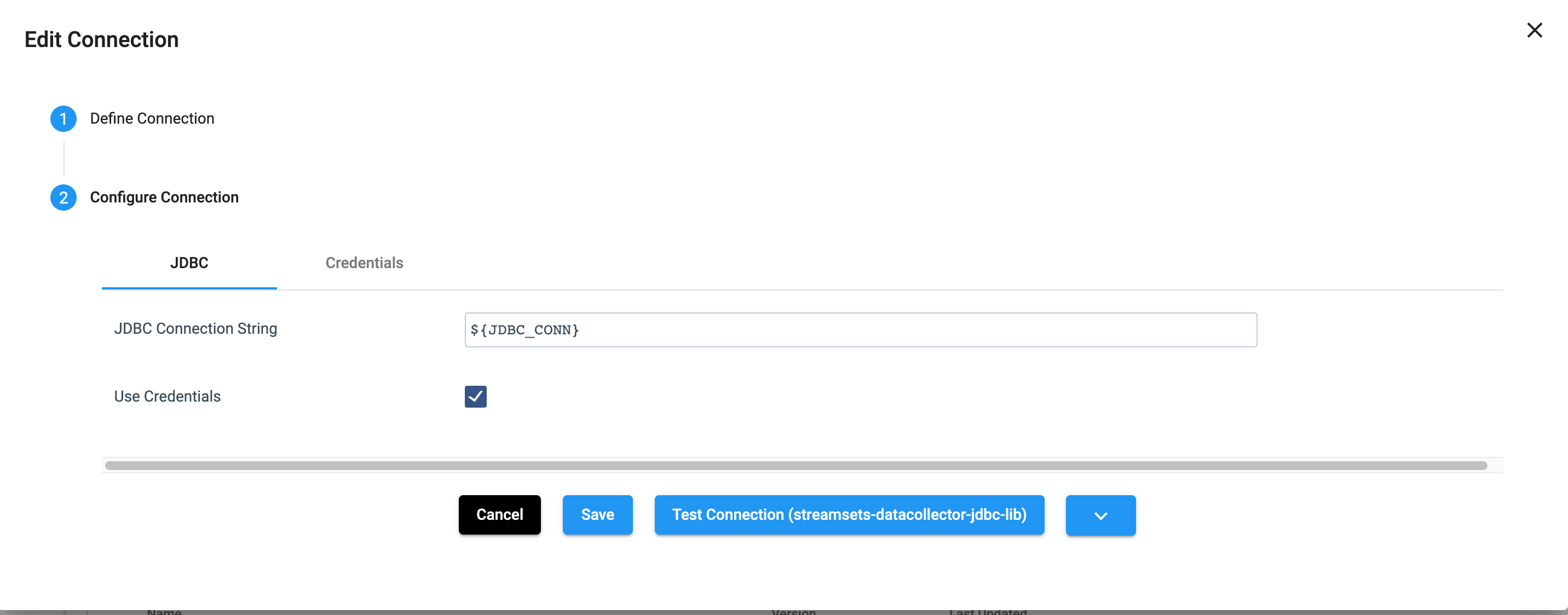

We use connection objects for our pipelines and our credential store is AWS.

Our structure is such that ONLY the Operations team can deploy pipelines to PROD deployments / engines.

We are new to the DataOps Platform, and we noticed that we could NOT change the connection to point to PROD sources when creating a job instance from an already tested pipeline.

Our “Operations” team had to create a new version of the pipeline pointing to the PROD connections, and ONLY then could they create a job instance and deploy it. Note: the PROD connections are accessible ONLY to our Operations team.

It would be nice to have the option to choose the values for the connection objects at the point of job instance creation.

Please advise if there are any alternatives.

P.S. I understand I can always switch to runtime parameters to meet my requirements, but then I would have to give up on Connection Objects entirely.

Thanks,