Hi there,

Recently, none of our Job Instances in DataOps Platform executed. Each one reported the following message:

JOBRUNNER_73 - Insufficient resources to run job. All matching Data Collectors '[https://XXXXXX:18600]' have reached their maximum memory limits.

We understand this happened because memory utilization on the engine exceeded 80% and the garbage collection process did not kick in.

We are using Data Collector v4.3, installed from the tarball on an AWS EC2 instance. We allocated enough memory for the few job instances that are scheduled to run, and each job instance processes fewer than 5,000 records.

We only noticed the issue after more than 12 hours, because neither the Job Instance nor the pipeline failed:

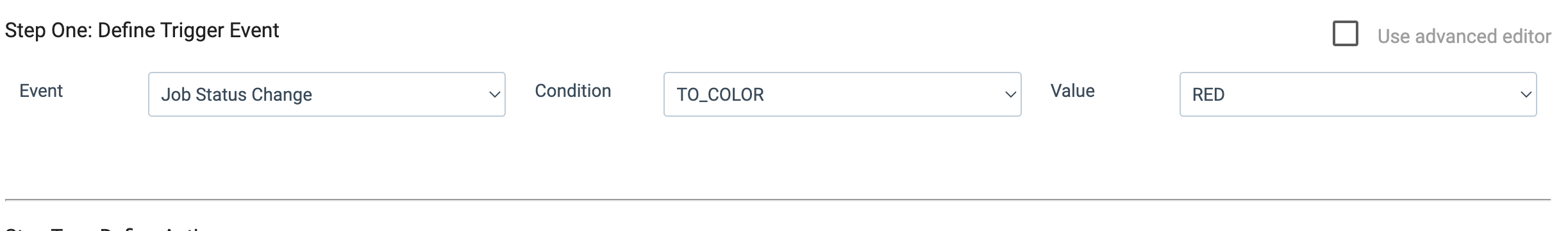

The platform is about to be released to the wider team, so we need answers to the following:

- How do we get an alert when a Job Instance does not execute on time? Note: it did not have any errors. It was just in an ACTIVE state, color-coded in RED with the above message, and the message was not even shown as an ERROR.

- How do we set up an alert to monitor memory utilization on the Data Collector engine in DataOps Platform?

- Do we need to do any extra tuning of the garbage collection process?
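To make the first two questions concrete, here is a rough Python sketch of the checks we would like an alert to perform. It is only an illustration under our own assumptions: the `EngineSnapshot`/`JobSnapshot` types, their field names, the 30-minute grace period and the job name are hypothetical, and the sketch does not call any StreamSets API. How to obtain these values from DataOps Platform is exactly what we are asking.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional

# Hypothetical snapshot types: the field names are our own, not a StreamSets API.
@dataclass
class EngineSnapshot:
    url: str
    heap_used_bytes: int
    heap_max_bytes: int

@dataclass
class JobSnapshot:
    name: str
    status: str                            # e.g. "ACTIVE"
    last_run_finished: Optional[datetime]  # None if no run has completed yet
    expected_interval: timedelta           # how often the schedule should produce a run

# Alert threshold taken from the 80% utilization we observed.
MEMORY_ALERT_RATIO = 0.80
# Hypothetical slack before a late job counts as stalled.
GRACE = timedelta(minutes=30)

def memory_alerts(engines: List[EngineSnapshot]) -> List[str]:
    """Return one message per engine whose heap usage exceeds the threshold."""
    alerts = []
    for engine in engines:
        ratio = engine.heap_used_bytes / engine.heap_max_bytes
        if ratio > MEMORY_ALERT_RATIO:
            alerts.append(f"{engine.url}: memory at {ratio:.0%}")
    return alerts

def stalled_job_alerts(jobs: List[JobSnapshot], now: datetime) -> List[str]:
    """Flag ACTIVE jobs with no completed run within their expected interval plus GRACE.

    This targets the case we hit: the job never failed, it just stayed ACTIVE.
    A job that has never completed a run is also flagged.
    """
    alerts = []
    for job in jobs:
        if job.status != "ACTIVE":
            continue
        deadline = job.expected_interval + GRACE
        if job.last_run_finished is None or now - job.last_run_finished > deadline:
            alerts.append(f"{job.name}: no completed run within {deadline}")
    return alerts

if __name__ == "__main__":
    now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
    engines = [EngineSnapshot("https://XXXXXX:18600", 850, 1000)]
    jobs = [JobSnapshot("example-job", "ACTIVE", now - timedelta(hours=12), timedelta(hours=1))]
    # Prints "https://XXXXXX:18600: memory at 85%" and
    # "example-job: no completed run within 1:30:00"; in practice these would go to email/Slack.
    for message in memory_alerts(engines) + stalled_job_alerts(jobs, now):
        print(message)
```

We would expect a real version of this to run on a schedule outside the engine, so that it can still raise an alert when the engine itself is the problem.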

NOTE:

- I have failover enabled for all job instances, but we did not have a failover engine set up. Our deployment has just one engine running.

- Both the maximum number of retries and the global retries were set to -1.

Can someone confirm whether this is why the Job Instance was not marked as FAILED and no alert was sent?

Thanks,

Srini