Hello,

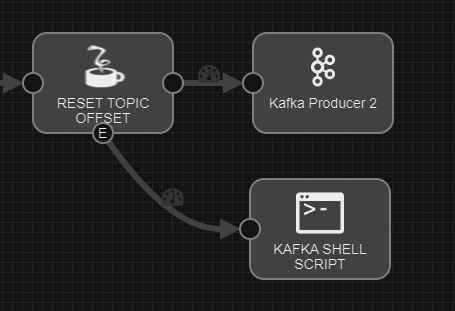

I'm currently working on a simple pipeline that ingests Kafka messages into a log file.

I'm trying to consume all the data from the beginning of a topic, but I'm only getting data newly added to the topic.

Once messages have been consumed, they are no longer accessible to the pipeline.

I've already tested all the different values of the "Auto Offset Reset" property.

The behavior is the same with both the simple and the multitopic consumer.
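To make the behavior I suspect concrete, here is a minimal Python sketch of how I understand Kafka chooses a starting offset. It is a simplified model, not StreamSets or Kafka code: the function name `starting_offset` and the offsets are made up for illustration. The assumption is that auto.offset.reset is only consulted when the consumer group has no valid committed offset.

```python
from typing import Optional


def starting_offset(committed: Optional[int],
                    log_start: int,
                    log_end: int,
                    auto_offset_reset: str) -> int:
    """Return the offset a consumer would start reading from (simplified model)."""
    # A valid committed offset for the consumer group always wins.
    if committed is not None and log_start <= committed <= log_end:
        return committed
    # auto.offset.reset is consulted only when no usable committed offset exists.
    if auto_offset_reset == "earliest":
        return log_start
    if auto_offset_reset == "latest":
        return log_end
    # With "none", the consumer reports an error instead of picking an offset.
    raise ValueError("no committed offset and auto.offset.reset=none")


# Hypothetical partition holding offsets 0..99 (log end offset is 100).
print(starting_offset(None, 0, 100, "earliest"))  # new group: 0
print(starting_offset(100, 0, 100, "earliest"))   # group already committed 100: 100
```

If this model is right, changing "Auto Offset Reset" has no effect once the group has committed offsets, which would match what I'm seeing.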

The official documentation at docs.streamsets.com lists these properties: auto.commit.interval.ms, bootstrap.servers, enable.auto.commit, group.id, max.poll.records

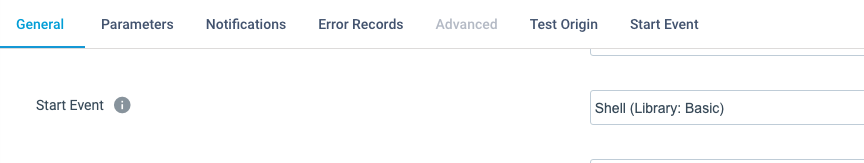

If I understand correctly, all of those parameters are locked, so I can't disable offset management and process all the data from the beginning of a topic.

Is there an additional Kafka configuration property I can use, or do I need to configure the topic directly via the Kafka CLI?

StreamSets Data Collector version: 3.14.0

Kafka Consumer version: 2.0.0

Regards.