Hi

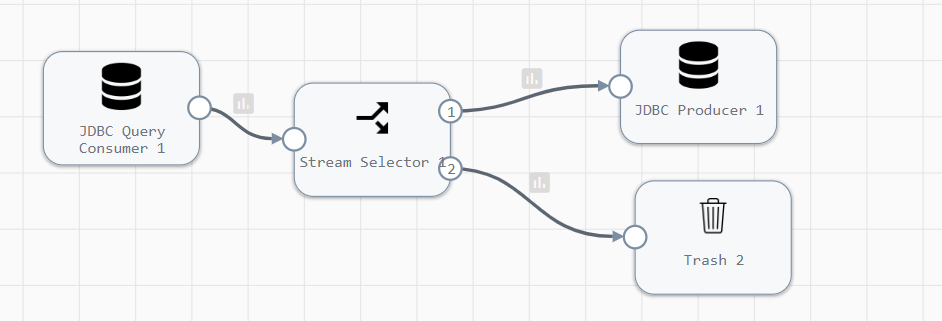

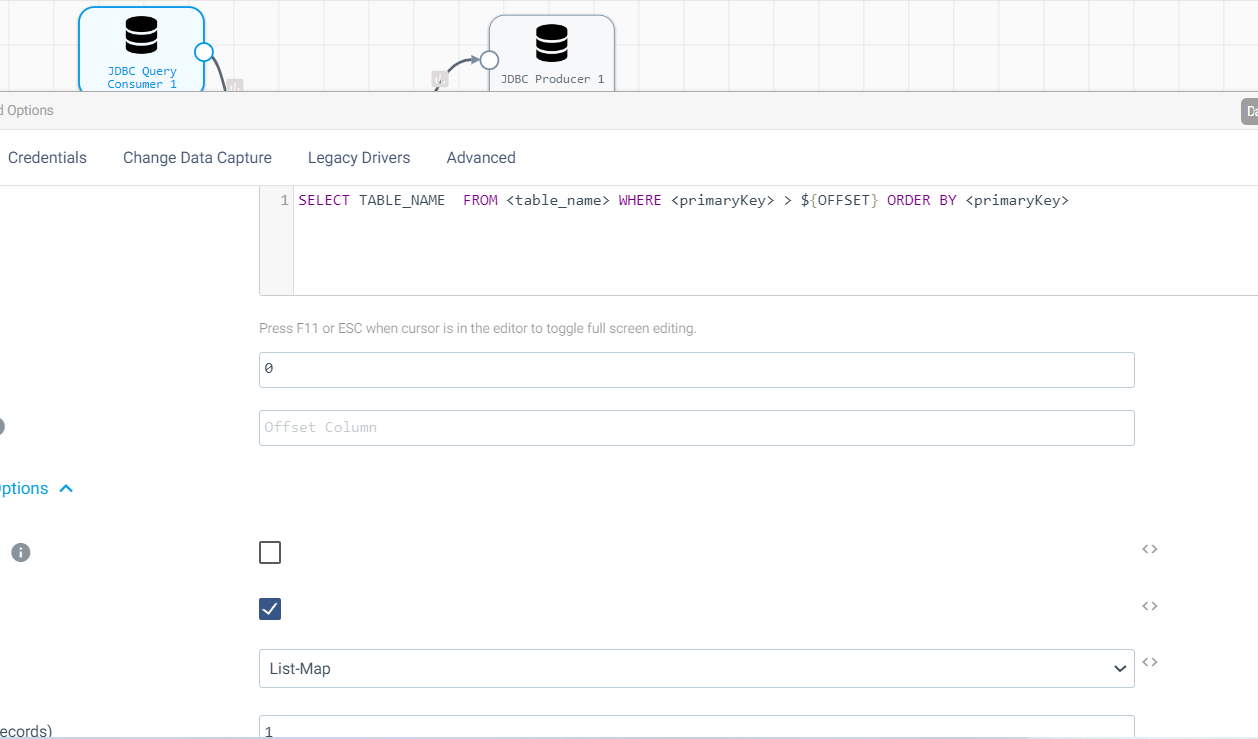

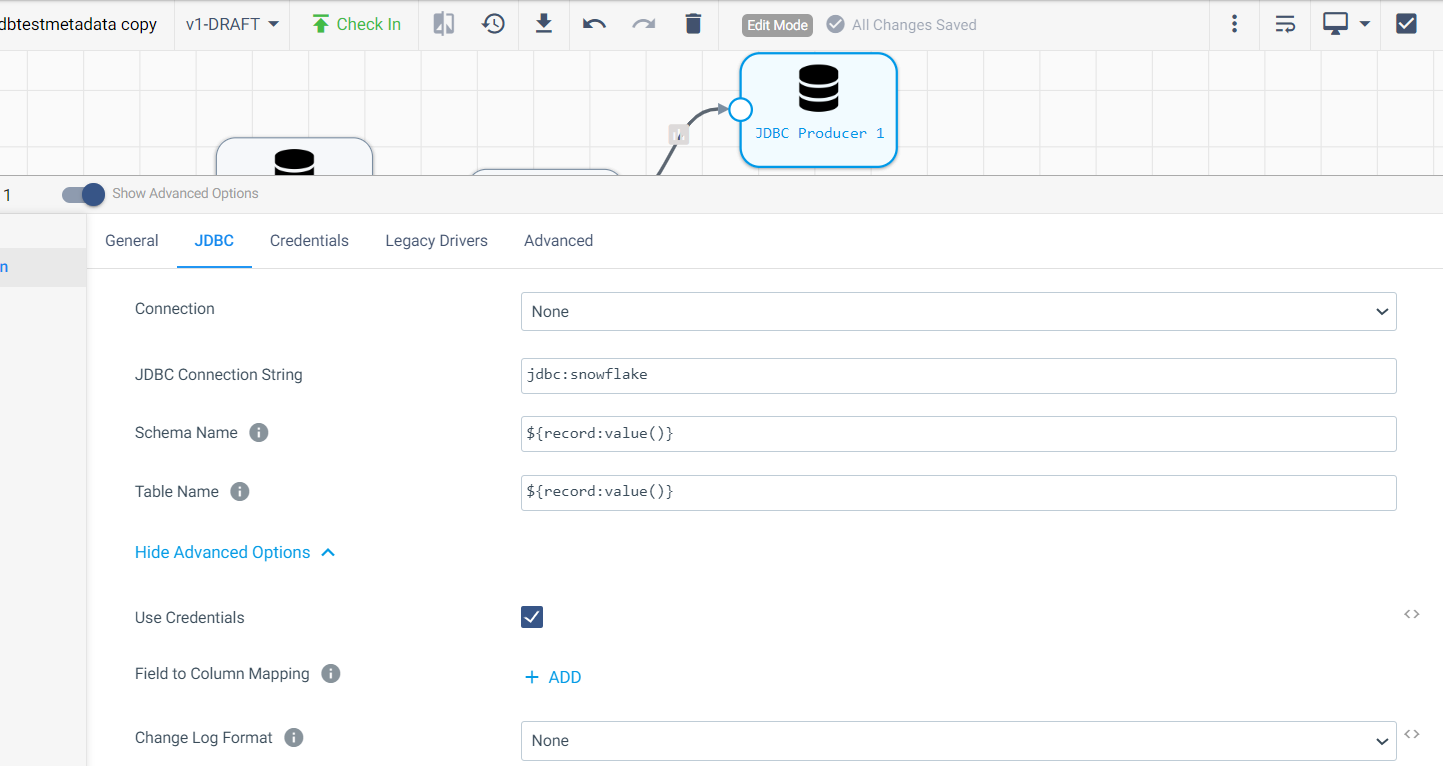

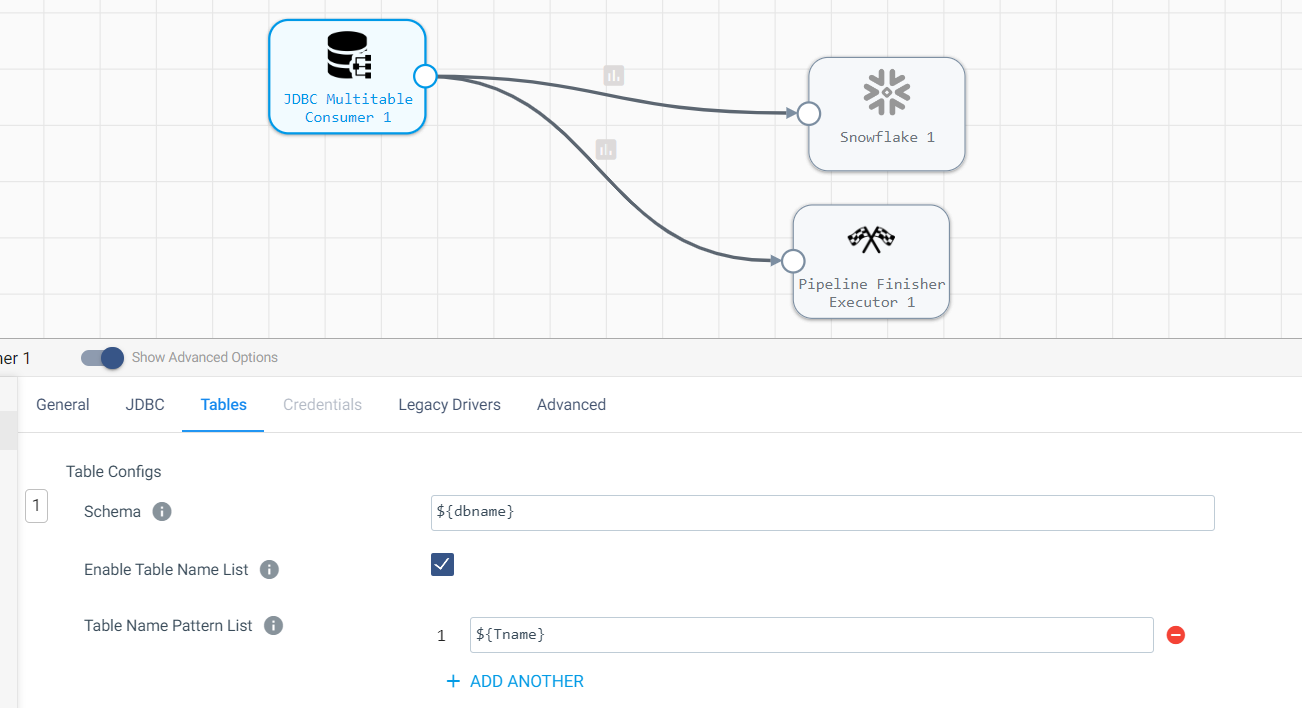

I'm currently implementing a metadata-driven pipeline. One of its key components is a job that transfers data from Teradata to Snowflake. To make this process dynamic and reusable, I've created a separate job that loads the data into Snowflake.

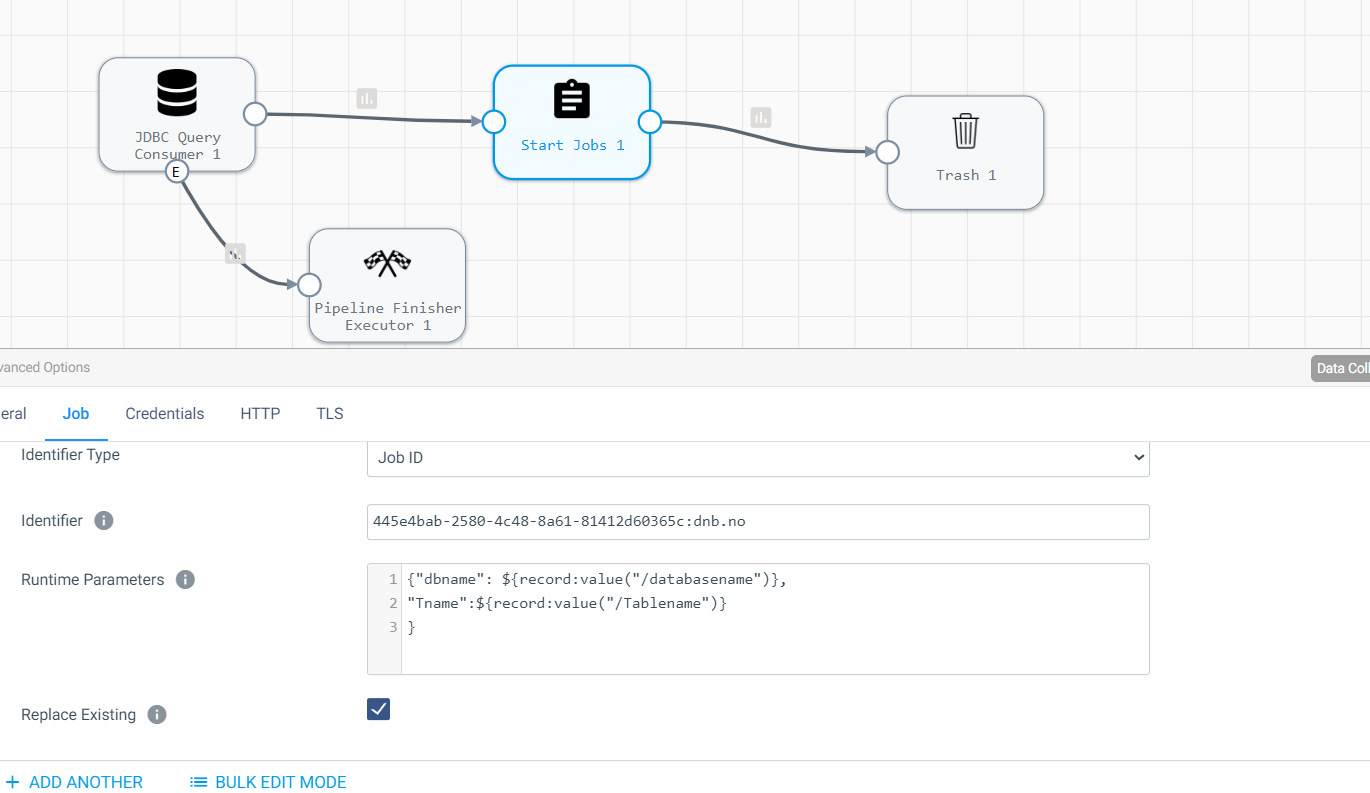

I've also built a separate pipeline that fetches metadata from Teradata. This metadata includes the values for ${dbname} and ${Tname}, which I intend to pass as parameters to the "startjobs" process. However, I'm unsure how the job I created for the Teradata-to-Snowflake transfer will actually pick up and use these parameter values. I would appreciate any guidance on this.
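How "startjobs" hands values to the child job depends on the tool and is not shown here. As a minimal sketch of the general pattern only, the Python below resolves `${dbname}` and `${Tname}` placeholders once per metadata row. It relies on Python's `string.Template`, which happens to use the same `${name}` syntax. The metadata rows, `EXTRACT_SQL`, `TARGET_TABLE`, and `build_job_params` are all hypothetical names chosen for illustration.

```python
from string import Template

# Hypothetical metadata rows, as the metadata pipeline might return them
# (one row per table to transfer). Values are illustrative only.
METADATA_ROWS = [
    {"dbname": "SALES_DB", "Tname": "ORDERS"},
    {"dbname": "SALES_DB", "Tname": "CUSTOMERS"},
]

# Hypothetical templates inside the transfer job: ${dbname} and ${Tname}
# are the placeholders the child job would resolve for each run.
EXTRACT_SQL = Template("SELECT * FROM ${dbname}.${Tname}")
TARGET_TABLE = Template("${Tname}")


def build_job_params(rows):
    """Return one resolved (source query, target table) pair per metadata row."""
    runs = []
    for row in rows:
        # substitute() raises KeyError when a placeholder has no value,
        # so missing metadata fails early instead of producing a bad query.
        runs.append((EXTRACT_SQL.substitute(row), TARGET_TABLE.substitute(row)))
    return runs


if __name__ == "__main__":
    for query, target in build_job_params(METADATA_ROWS):
        print(f"{query} -> {target}")
```

The parent process iterates over the metadata, and the child job only ever sees already-resolved values. This keeps the transfer job itself generic, with one definition reused for every table.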