Hi All

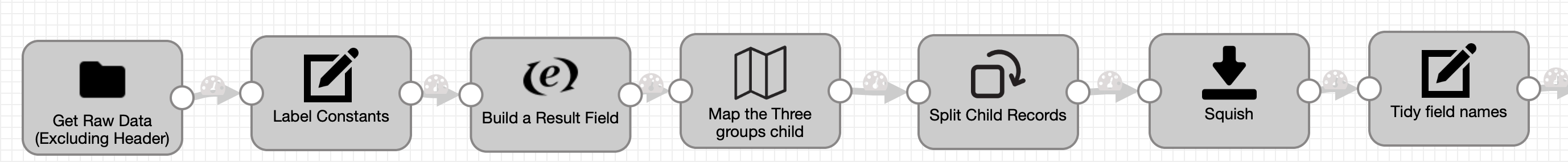

I am reading a CSV file created by an instrument. Each record starts with some header information, followed by a collection of fields holding the values for each element tested for. I want to write each element's value set to the database via JDBC as a single row.

Example record layout:

machine_id, date, time, run Number, element, element_value, element_error, element, element_value, element_error, ... (50 of these sets)

For each set of element, value and error, I need to write machine_id, date, time, run Number, element, element_value, element_error to the database. I can't figure out how to loop through the record.
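A rough sketch of the looping logic, written in plain Python outside StreamSets just to show the idea. It assumes exactly 4 header fields followed by repeating (element, element_value, element_error) triples; the function name `explode_record` and the sample values are hypothetical. It is not StreamSets API; the same loop could in principle be ported to one of StreamSets' scripting evaluator stages, but that port isn't shown here.

```python
import csv
import io

# Assumed layout (from the post): 4 header fields, then repeating
# (element, element_value, element_error) triples.
HEADER_COUNT = 4
SET_SIZE = 3

def explode_record(fields):
    """Turn one instrument CSV record into one output row per element set (hypothetical helper)."""
    header = [f.strip() for f in fields[:HEADER_COUNT]]
    sets = [f.strip() for f in fields[HEADER_COUNT:]]
    if len(sets) % SET_SIZE != 0:
        raise ValueError(f"expected a multiple of {SET_SIZE} element fields, got {len(sets)}")
    rows = []
    # Step through the element fields three at a time.
    for i in range(0, len(sets), SET_SIZE):
        element, value, error = sets[i:i + SET_SIZE]
        # Repeat the header fields on every output row.
        rows.append(tuple(header) + (element, value, error))
    return rows

# Hypothetical sample line with 2 element sets (the real file has ~50).
sample = "M1, 2024-01-01, 10:00, 7, Fe, 1.23, 0.01, Cu, 4.56, 0.02\n"
record = next(csv.reader(io.StringIO(sample)))
for row in explode_record(record):
    print(row)
```

With 50 sets in a record, this yields 50 rows, each carrying the same machine_id, date, time and run Number.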

So from one CSV record I need to write 50 JDBC records. Is this possible in StreamSets?
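To illustrate the "one record in, 50 rows out" write, here is a minimal sketch that uses an in-memory SQLite database as a stand-in for the JDBC target. The table name `element_results`, its column names and the sample values are all hypothetical; the point is only that each element set becomes its own INSERT, sent as one batch.

```python
import sqlite3

# Hypothetical already-parsed record: 4 header fields + 3 element sets.
fields = ["M1", "2024-01-01", "10:00", "7",
          "Fe", "1.23", "0.01", "Cu", "4.56", "0.02", "Zn", "0.78", "0.03"]
header, sets = fields[:4], fields[4:]
# One output row per (element, element_value, element_error) triple.
rows = [tuple(header) + tuple(sets[i:i + 3]) for i in range(0, len(sets), 3)]

# In-memory SQLite stands in for the real JDBC database here.
conn = sqlite3.connect(":memory:")
conn.execute("""CREATE TABLE element_results (
    machine_id TEXT, run_date TEXT, run_time TEXT, run_number INTEGER,
    element TEXT, element_value REAL, element_error REAL)""")
# One INSERT per element set, executed as a single batch.
conn.executemany("INSERT INTO element_results VALUES (?, ?, ?, ?, ?, ?, ?)", rows)
conn.commit()

count = conn.execute("SELECT COUNT(*) FROM element_results").fetchone()[0]
print(count)  # 3
```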

Thanks