Hello Experts,

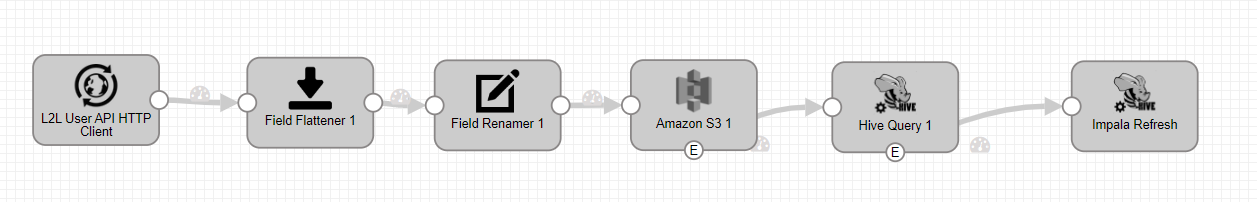

I am building an SDC pipeline that uses the HTTP Client origin. The API call returns JSON, which may be nested.

After a few stages such as rename, pivot, and flatten, I write the data to S3. The next stage is a Hive query that reads the data from an external table over S3 and inserts it into the final Parquet table.

The source APIs have batch-size limits: some allow only 1,000 records per call, others 2,000.

The SDC pipeline works fine: when the data exceeds the batch size, it pulls it in batches using offsets and inserts it into the final table.
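To make the offset pagination concrete, here is a minimal plain-Python sketch of the loop the pipeline performs. It is not StreamSets code: `paginate`, `fake_api` and the 2,500-record total are hypothetical stand-ins, and it assumes the API signals the end of the data by returning a page shorter than the batch size.

```python
from typing import Callable, Dict, Iterator, List

Record = Dict[str, int]


def paginate(fetch_page: Callable[[int, int], List[Record]],
             batch_size: int) -> Iterator[List[Record]]:
    """Yield pages until the API returns a short (or empty) page.

    Assumption: a page shorter than batch_size means there is no more data.
    """
    offset = 0
    while True:
        page = fetch_page(offset, batch_size)
        if page:
            yield page
        if len(page) < batch_size:
            break  # short page: end of data
        offset += len(page)  # next request starts after the last record seen


# Hypothetical stand-in for the source API: 2,500 records, capped at 1,000 per call.
TOTAL = 2500


def fake_api(offset: int, limit: int) -> List[Record]:
    end = min(offset + min(limit, 1000), TOTAL)
    return [{"id": i} for i in range(offset, end)]


pages = list(paginate(fake_api, batch_size=1000))
print([len(p) for p in pages])  # [1000, 1000, 500]
```

Note that `batch_size` has to match the API's per-call cap (1,000 or 2,000 here); if it is larger, the first capped page looks short and the loop stops early.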

For every batch, the S3 stage creates a file; each file fires an S3 event, which executes the remaining stages of the pipeline.

Issue:

This takes a long time when there are more than 100,000 records.
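The per-batch trigger explains why the run time grows with volume. Assuming one S3 file per batch, one event per file and one Hive query per event (as described above), the number of Hive runs is the record count divided by the batch size, rounded up. `hive_runs_per_batch` is just a hypothetical helper for the arithmetic:

```python
import math


def hive_runs_per_batch(total_records: int, batch_size: int) -> int:
    """One S3 file per batch -> one event per file -> one Hive query per event."""
    return math.ceil(total_records / batch_size)


for batch_size in (1000, 2000):
    print(batch_size, hive_runs_per_batch(100_000, batch_size))
# 1000 100
# 2000 50
```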

Is there a better way to improve the performance of this SDC pipeline? Most of the time is spent in the Hive query stage. Is there a way to hold off executing the Hive query (and/or the other stages after the S3 stage) until all of the data from the API call has landed in S3?
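One way to picture what I am asking for: treat each S3 file event as a cheap bookkeeping step and run the expensive Hive load only once, when an end-of-data signal arrives. The sketch below models only that control flow in plain Python, not SDC configuration; `DeferredLoader`, `run_load`, `on_all_data_received` and the `s3://bucket/staging/...` keys are hypothetical, and it assumes the pipeline can emit some end-of-data signal after the last API page.

```python
from typing import Callable, List


class DeferredLoader:
    """Collect S3 file events and run the final Hive load only once.

    run_load is a hypothetical callback standing in for the Hive query
    (e.g. INSERT INTO final_table SELECT ... FROM external_table).
    """

    def __init__(self, run_load: Callable[[List[str]], None]) -> None:
        self.run_load = run_load
        self.pending: List[str] = []

    def on_file_written(self, s3_key: str) -> None:
        # Per-batch event: only remember the file, do not query Hive yet.
        self.pending.append(s3_key)

    def on_all_data_received(self) -> None:
        # End-of-data signal: one load covering every file written so far.
        if self.pending:
            self.run_load(self.pending)
            self.pending = []


loads: List[List[str]] = []
loader = DeferredLoader(run_load=lambda keys: loads.append(list(keys)))
for i in range(100):  # e.g. 100,000 records / 1,000 per batch
    loader.on_file_written(f"s3://bucket/staging/part-{i:03d}.json")
loader.on_all_data_received()
print(len(loads), len(loads[0]))  # 1 100
```

If the external table points at the S3 location the batches are written to, a single INSERT ... SELECT after the last batch could load all of them in one pass instead of running the query once per file.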

The image above shows a typical pipeline.

Thanks in advance

Meghraj