Problem Statement

Customers often send us duplicate records when they export data from their DWH. To ensure data quality, we need to filter out these duplicates before they enter our platform for processing, computation and analysis.

Solution

We addressed this using two separate pipelines.

- Pipeline-1: Files arriving in S3 are picked up by this pipeline. For every record, it calculates the MD5 hash and writes an entry to Redis, with the MD5 hash of the record as the KEY and the “Filename-RowNumber” of the current record as the VALUE.

- Pipeline-2: The same file processed by Pipeline-1 is picked up again by Pipeline-2. For every record, it calculates the MD5 hash and looks up its value in Redis to determine which row number to process and which rows to drop as duplicates.

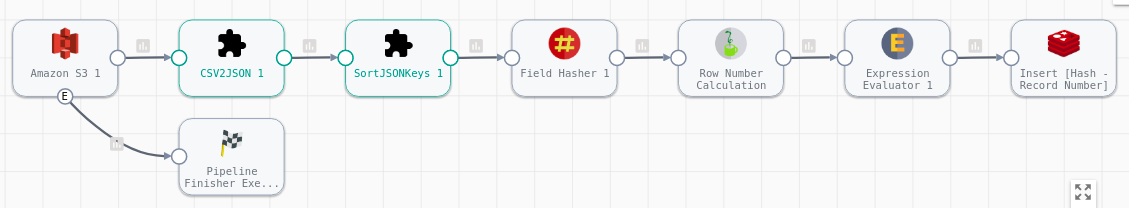

Pipeline-1:

As you may see above:

- The CSV file is picked up from the S3 origin and converted to JSON.

- Using Field Hasher, we calculate the MD5 Hash of the whole JSON record.

- We insert each record into Redis, where the KEY is the MD5 hash and the VALUE is “Filename-RowNumber”.

- We use a Jython Evaluator to calculate the row number of the current record in the given file.

- If the incoming file contains duplicate rows, they all produce the same hash, so each write overwrites the previous one. Redis ends up holding the hash of the record and the row number of its last occurrence.
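
The steps above can be sketched in plain Python to show why only the last occurrence of a duplicate survives in the store. This is an illustrative sketch, not the pipeline itself: a plain `dict` stands in for Redis, the helper names `record_hash` and `index_file`, the sample file name, and the 1-based row numbering are all assumptions made for the example.

```python
import csv
import hashlib
import io
import json


def record_hash(row):
    """MD5 of the whole record, serialized as JSON with sorted keys."""
    payload = json.dumps(row, sort_keys=True).encode("utf-8")
    return hashlib.md5(payload).hexdigest()


def index_file(filename, csv_text, store):
    """Write hash -> "Filename-RowNumber" for every row of the file.

    `store` is a dict standing in for Redis (assumption). A later row
    with the same hash overwrites the entry written by an earlier one.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    for row_number, row in enumerate(reader, start=1):  # 1-based (assumption)
        store[record_hash(row)] = f"{filename}-{row_number}"
    return store


# Hypothetical sample: rows 1 and 3 are identical.
sample = "id,name\n1,alice\n2,bob\n1,alice\n"
store = index_file("customers.csv", sample, {})

for key, value in store.items():
    print(key, value)
```

Against a real Redis server, the dict assignment would correspond to a `SET` on the hash key.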

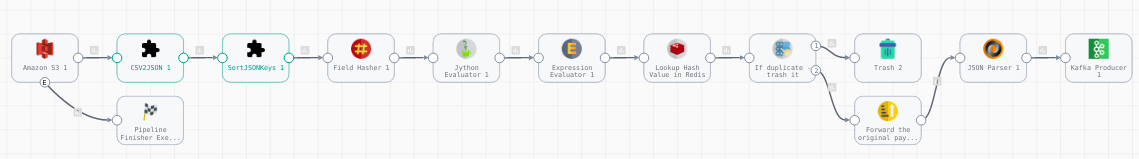

Pipeline-2:

As you may see above:

- The CSV file is picked up from the S3 origin and converted to JSON.

- Using Field Hasher, we calculate the MD5 Hash of the whole JSON record.

- We use a Jython Evaluator to calculate the row number of the current record in the given file.

- We use the Redis Lookup processor to get the value stored for the current hash.

- The Stream Selector checks whether the current row number matches the row number returned by the Redis lookup. If they match, the record is processed (published to Kafka as our clean record); otherwise the row is dropped as a duplicate.
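
The two passes can be put together in a short Python sketch to show the keep-or-drop decision end to end. As before, this is only an approximation under stated assumptions: a `dict` stands in for Redis, a returned list stands in for the Kafka topic, and the names `record_hash`, `read_rows`, `dedupe`, and the sample data are hypothetical.

```python
import csv
import hashlib
import io
import json


def record_hash(row):
    """MD5 of the whole record, serialized as JSON with sorted keys."""
    payload = json.dumps(row, sort_keys=True).encode("utf-8")
    return hashlib.md5(payload).hexdigest()


def read_rows(csv_text):
    """Return (row_number, row) pairs, numbered from 1 (assumption)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return list(enumerate(reader, start=1))


def dedupe(filename, csv_text):
    store = {}  # stand-in for Redis (assumption)
    rows = read_rows(csv_text)

    # Pass 1 (Pipeline-1): record the last row number seen for each hash.
    for row_number, row in rows:
        store[record_hash(row)] = f"{filename}-{row_number}"

    # Pass 2 (Pipeline-2): keep a row only if the stored value points at it.
    clean = []  # stand-in for the Kafka topic (assumption)
    for row_number, row in rows:
        if store.get(record_hash(row)) == f"{filename}-{row_number}":
            clean.append(row)
    return clean


# Hypothetical sample: rows 1 and 3 are identical, so row 1 is dropped.
sample = "id,name\n1,alice\n2,bob\n1,alice\n"
clean = dedupe("customers.csv", sample)
print(clean)
```

Note that this approach keeps the last occurrence of each duplicate rather than the first, so the clean output preserves the relative order of the surviving rows but not necessarily the position of the first copy.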