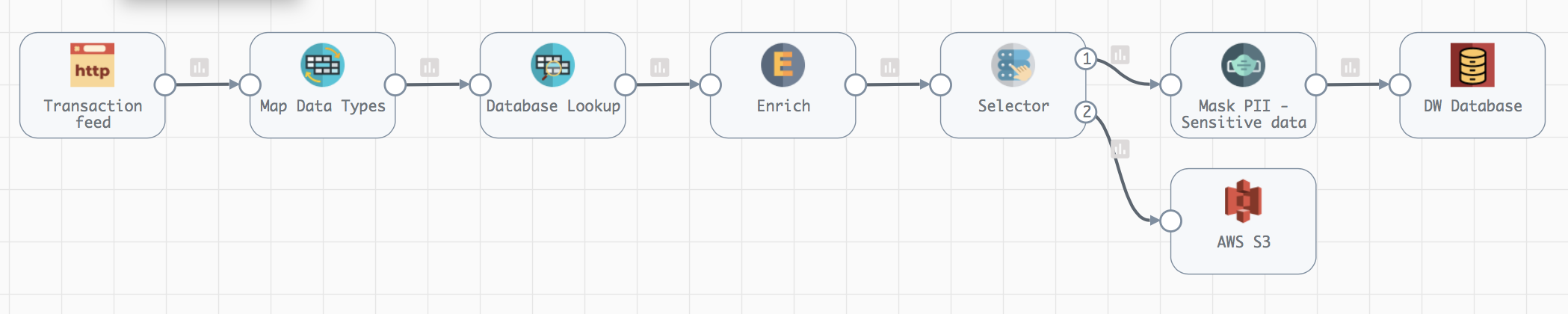

A streaming data pipeline for transactions, some of which may be updates to existing records. Each incoming transaction is looked up against matching records in a PostgreSQL database, and a filter condition determines whether it is an update or a new transaction. Updates have their sensitive data masked and are written to a PostgreSQL table. New transactions are written to an AWS bucket, and unprocessed transactions (pipeline errors) are written to a Kafka destination for further analysis.
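
The routing described above can be sketched in plain Python. This is only an illustration under stated assumptions, not the pipeline's actual configuration: the field names `id` and `card_number`, the destination labels, and the rule "a matching record exists means update" are all hypothetical, and the PostgreSQL lookup is modelled as an in-memory set of known IDs.

```python
from typing import Any, Dict, Set, Tuple

# Hypothetical list of fields treated as sensitive; the real pipeline may differ.
SENSITIVE_FIELDS = ("card_number",)


def mask(value: str, visible: int = 4) -> str:
    """Replace all but the last `visible` characters with '*'."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def route(txn: Dict[str, Any], existing_ids: Set[Any]) -> Tuple[str, Dict[str, Any]]:
    """Return (destination, record) for a single transaction.

    `existing_ids` stands in for the database lookup (an assumption).
    """
    try:
        txn_id = txn["id"]  # raises KeyError if the record is malformed
        if txn_id in existing_ids:
            # Update: mask sensitive fields before writing to the database table.
            masked = dict(txn)
            for field in SENSITIVE_FIELDS:
                if field in masked:
                    masked[field] = mask(str(masked[field]))
            return "postgres", masked
        # New transaction: passed through unchanged to the bucket.
        return "bucket", dict(txn)
    except Exception as exc:
        # Anything that fails processing goes to the error topic for analysis.
        return "kafka_errors", {"error": repr(exc), "record": txn}
```

For example, `route({"id": 1, "card_number": "4111111111111111"}, {1})` is treated as an update and masked, while a record with no `id` field is routed to the error destination instead of stopping the stream.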