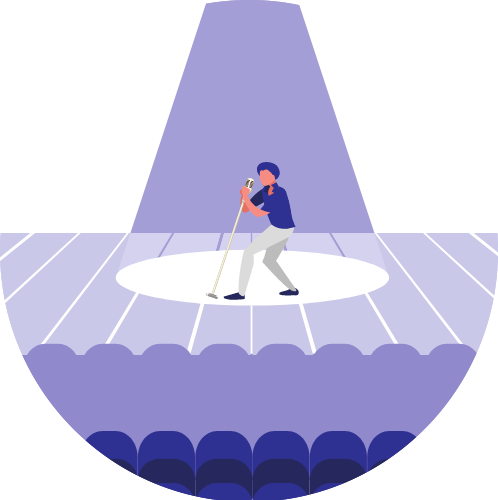

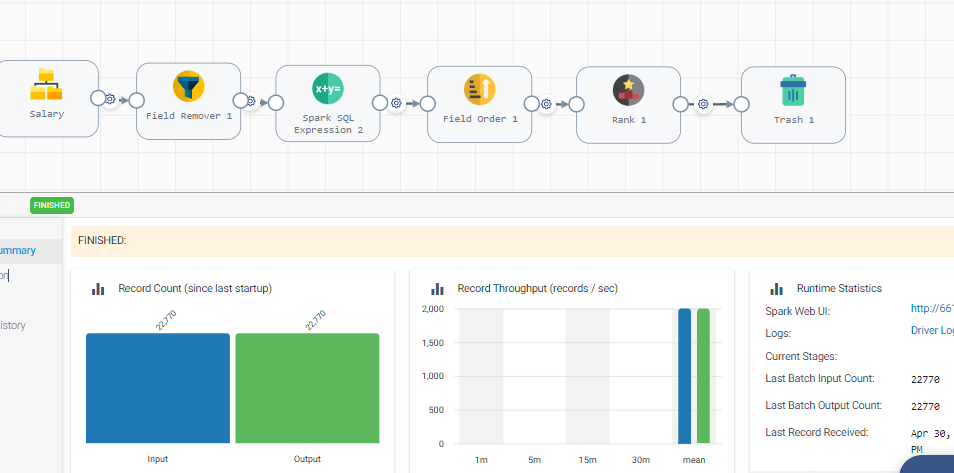

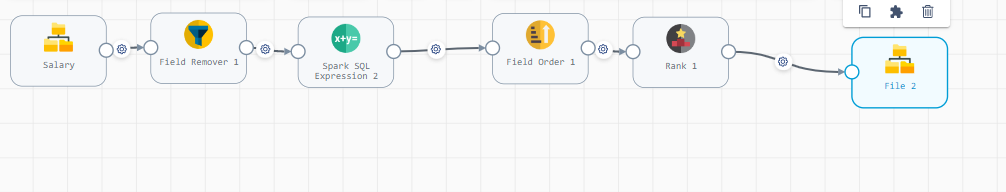

In Transformer, I am sending data from File (directory) to File (local FS),

but the destination fails with an error:

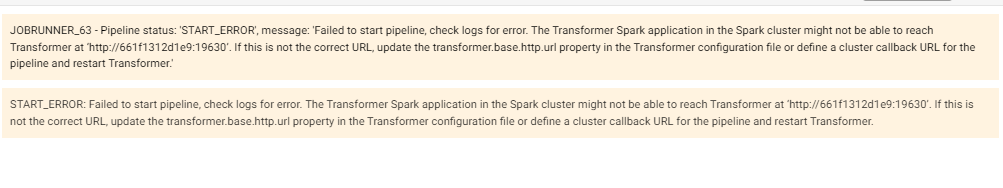

JOBRUNNER_63 - Pipeline status: 'RUN_ERROR', message: 'Operator File_3 failed due to org.apache.spark.sql.AnalysisException, check Driver Logs for further information'

RUN_ERROR: Operator File_3 failed due to org.apache.spark.sql.AnalysisException, check Driver Logs for further information

How can I resolve this error? Any help would be appreciated.

Thanks

Tamilarasu