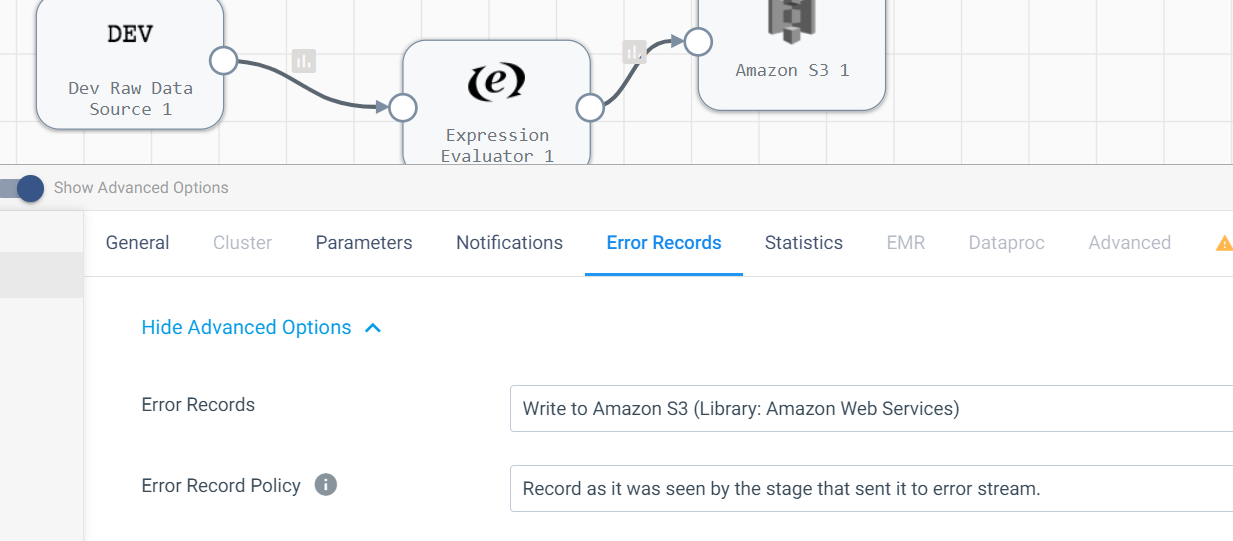

We are logging pipeline errors and orchestrated task errors in a database. Often, though, the runtime error we capture is a generic message such as “For actual error, open the logs” instead of the underlying error. With our current solution, that placeholder message is what gets logged to the database. We don’t want that; instead, we want the actual error to be logged. How can we do that?