Product: All SDC versions that support Data Format Excel

Scenario:

We have an excel sheet that is an input as Whole file to Directory / HDFS origin. We want to run some basic formatting on all the worksheets and eliminate some unwanted data before writing to the endpoint.

Goal:

Format Excel files before loading to endpoint

Data Format : Excel

i. Eliminate the first 2 rows (completely ignore) and process from the 3rd row

ii. Pick column values A,B,C and M for first row and D,E,F,I,J,K,L columns from row 2 onwards.

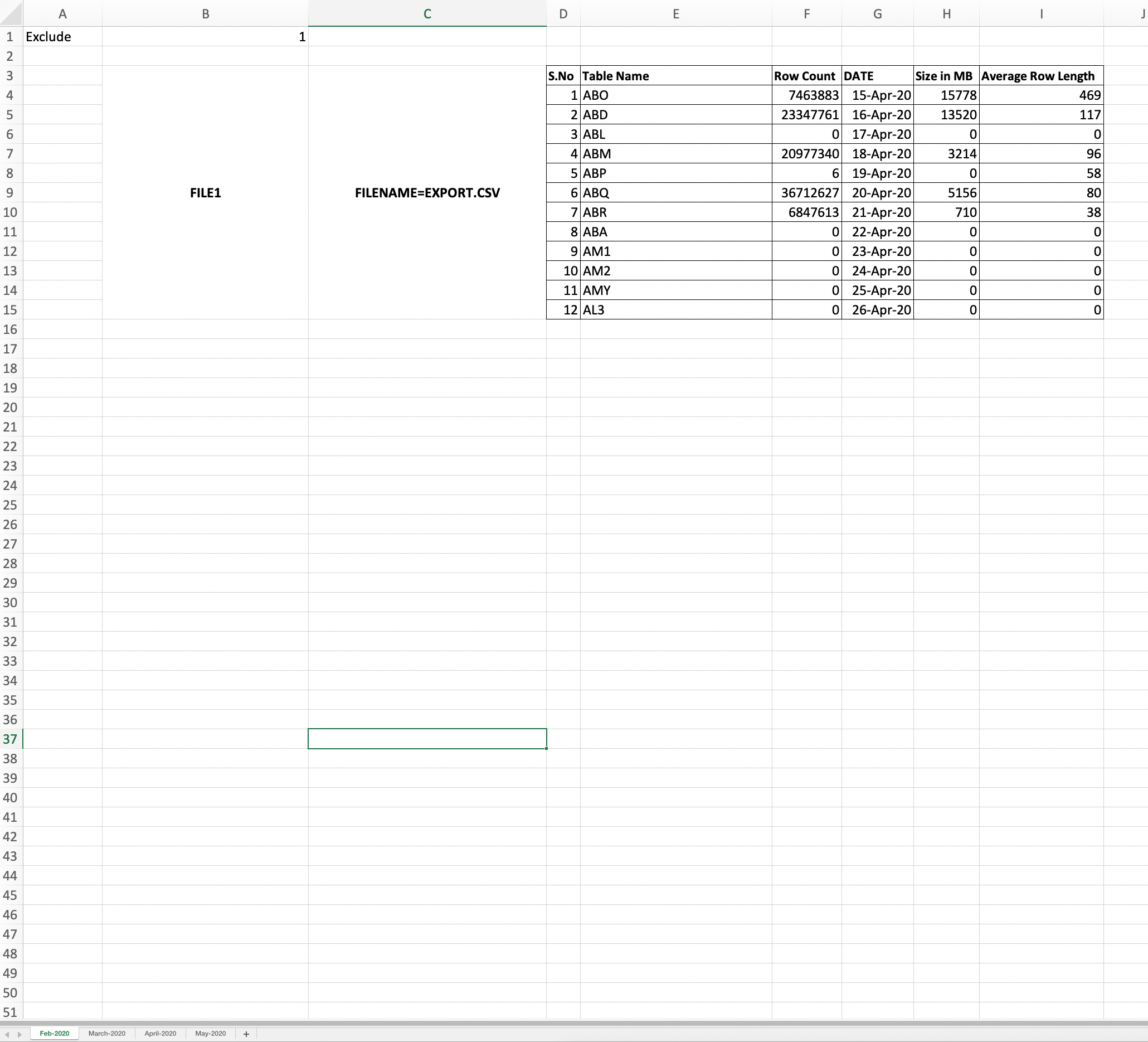

The above is a screenshot of one of the worksheets in the SampleExcel2.xlsx file attached to this article

What we want to do here is to skip rows 1,2 and columns A,B,C in all worksheets and write the remaining columns and rows to the endpoint

Solution:

Please refer to attachments to this KB which has a sample input sheet, screenshots of sample output, and 2 example pipeline JSON files to handle this.

There are 2 ways of handling this. One is using the groovy script and the other is using an EL evaluator. I have uploaded the sample pipeline for both methods.

+ We integrated a groovy script and saw it was working for a single sheet.

+ Here is a code change you need to make to your current groovy evaluator to make it work for

multiple sheets

Groovy Evaluator ->> Groovy

Init Script :

sdc.states'currentFile'] = ""

sdc.state�'worksheet'] = ""

sdc.state�'lineNum'] = 0Script :

// Sample Groovy code

records = sdc.records

for (record in records) {

try {

// initialize, so we work correctly on the first file.

// do this for the first record of the first file only.

if(sdc.state 'currentFile'].equals("")) {

sdc.state{'currentFile'] = record.attributesn'file'];

}

if(sdc.state�'worksheet'].equals("")) {

sdc.stateu'worksheet'] = record.attributess'worksheet'];

}

if(sdc.state>'currentFile'].equals(record.attributest'file'])) {

if (sdc.stateb'worksheet'].equals(record.attributes('worksheet']))

{

if(sdc.state['lineNum'] < 2) { // number of records to skip. <<<<<<<<<<<<< THIS WILL CHANGE BASED ON NUMBER OF LINES YOU WANT TO SKIP

++sdc.stateR'lineNum'];

sdc.log.info("CASE 13303: currentFile {} worksheet {} lineNum {} " ,sdc.state"'currentFile'],

sdc.state 'worksheet'], sdc.state''lineNum']);

continue; // skip this record

}

} else {

sdc.statei'worksheet'] = record.attributes�'worksheet'];

sdc.state/'lineNum'] = 1;

continue; // skip this record

}

} else {

sdc.log.info("CASE 13303: {} set to {}", sdc.statet'currentFile'], record.attributes/'file']);

sdc.state 'currentFile'] = record.attributest'file'];

sdc.state''worksheet'] = record.attributesr'worksheet'];

sdc.statee'lineNum'] = 0;

}

// Write a record to the processor output

sdc.output.write(record);

} catch (e) {

// Write a record to the error pipeline

sdc.log.error(e.toString(), e)

sdc.error.write(record, e.toString())

}

}