Pradeep wrote:

The Databricks Delta Lake destination is the final stage of an SDC pipeline. Let us briefly walk through the steps the Delta Lake stage performs when it processes data.

- SDC writes the output of the upstream stages to a local file.

- SDC uploads the file from the previous step (e.g. sdc*) to the configured staging location.

- The Databricks cluster reads the staged file and runs a COPY INTO query to load the data into the target Delta Lake table. If the table does not exist in the destination, SDC submits a CREATE TABLE query according to the stage configuration.

- A Spark job runs the query, and its response is returned as the result of the JDBC query.
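The first step can be pictured with a small Python sketch. It is not SDC's code: write_staging_file is a hypothetical helper that writes records to a local CSV file named after the sdc-<uuid>.csv pattern seen in the file names in this post, using the CSV settings that appear in the FORMAT_OPTIONS of the COPY query (no header, double-quote quoting, comma delimiter, backslash escape).

```python
import csv
import os
import tempfile
import uuid


def write_staging_file(records, directory):
    """Write records to a local CSV file named sdc-<uuid>.csv (hypothetical helper).

    The CSV settings mirror the FORMAT_OPTIONS of the COPY query in this post:
    no header row, double quote as quote character, comma as delimiter,
    backslash as escape character.
    """
    path = os.path.join(directory, f"sdc-{uuid.uuid4()}.csv")
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(
            f,
            delimiter=",",
            quotechar='"',
            escapechar="\\",
            doublequote=False,  # embedded quotes are backslash-escaped, not doubled
        )
        writer.writerows(records)  # data rows only, since 'header' = 'false'
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        staged = write_staging_file([["1", "a,b"], ["2", "plain"]], tmp)
        print(os.path.basename(staged))
        with open(staged, newline="", encoding="utf-8") as f:
            print(f.read())
```

A field containing the delimiter, such as a,b, is written quoted, which is why the COPY query has to declare the same quote and escape characters when it reads the file back.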

Any step in this flow can go wrong, so here are a few scenarios that should help you debug such failures.

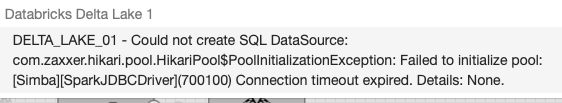

Validation error:

This error most likely means that the cluster identified by the cluster-id in the configured JDBC URL is not reachable or is currently shut down.

Solution:

- Verify that the configured cluster-id is correct.

- Verify that the cluster is in the running state. If it is not running, SDC triggers a start of the cluster, but pipeline validation keeps failing while the cluster is still starting.
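To check the first point, the cluster ID can be read out of the JDBC URL. The sketch below assumes the usual all-purpose cluster form of the Databricks JDBC URL, where the httpPath property ends in sql/protocolv1/o/<workspace-id>/<cluster-id>; the function name and the sample host, workspace ID, cluster ID, and token are hypothetical placeholders.

```python
def cluster_id_from_jdbc_url(jdbc_url):
    """Return the cluster ID from a Databricks JDBC URL, or None if absent.

    Assumes the all-purpose cluster form of the httpPath property:
    httpPath=sql/protocolv1/o/<workspace-id>/<cluster-id>
    """
    # Connection properties after host:port/schema are separated by ';'.
    for part in jdbc_url.split(";"):
        key, sep, value = part.partition("=")
        if not sep or key.strip().lower() != "httppath":
            continue
        segments = value.strip().strip("/").split("/")
        # Expected segments: sql, protocolv1, o, <workspace-id>, <cluster-id>
        if len(segments) == 5 and segments[:3] == ["sql", "protocolv1", "o"]:
            return segments[4]
    return None


if __name__ == "__main__":
    # Placeholder host, workspace ID, cluster ID and token (all hypothetical).
    url = (
        "jdbc:spark://example.cloud.databricks.com:443/default;transportMode=http;"
        "ssl=1;httpPath=sql/protocolv1/o/1234567890/0123-456789-abcdefgh;"
        "AuthMech=3;UID=token;PWD=<token>"
    )
    print(cluster_id_from_jdbc_url(url))  # 0123-456789-abcdefgh
```

Compare the printed ID with the cluster ID shown for the cluster in the Databricks workspace. For the second point, the cluster state (for example RUNNING or TERMINATED) is visible in the Databricks UI and is also returned by the Databricks Clusters API.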

Could not copy staged file:

This error can occur when the Databricks cluster does not have access to read the staged SDC file, which is the file used by the COPY INTO query that loads data into Delta Lake.

:"Databricks Delta Lake 1","errorCode":"DELTA_LAKE_34","errorMessage":"DELTA_LAKE_34 - Databricks Delta Lake load request failed: 'DELTA_LAKE_32 - Could not copy staged file 'sdc-2da25ea5-a255-46c8-830c-3bda3ee2c742.csv': java.sql.SQLException: [Simba][SparkJDBCDriver](500051) ERROR processing query/statement. Error Code: 0, SQL state: Error running query: com.amazonaws.services.s3.model.AmazonS3Exception: Bad Request; request: HEAD https://streamsetscontrol.s3-us-west-2.amazonaws.com {} aws-sdk-java/1.11.595 Linux/5.4.0-1059-aws OpenJDK_64-Bit_Server_VM/25.282-b08 java/1.8.0_282 scala/2.11.12 vendor/Azul_Systems,_Inc. com.amazonaws.services.s3.model.HeadBucketRequest; Request ID: EY5RWXXZKPE87ZC7, Extended Request ID: Y67xkfOkym23XU2v/pJr8TNwYrqVV4o+tKB/rFg/1wg/1GOvBmo/lbH9OHqe7D4OSJ4JX3uqotQ=, Cloud Provider: AWS, Instance ID: i-09ccc40f7bf6802f3 (Service: Amazon S3; Status Code: 400; Error Code: 400 Bad Request; Request ID: EY5RWXXZKPE87ZC7; S3 Extended Request ID: Y67xkfOkym23XU2v/pJr8TNwYrqVV4o+tKB/rFg/1wg/1GOvBmo/lbH9OHqe7D4OSJ4JX3uqotQ=), S3 Extended Request ID: Y67xkfOkym23XU2v/pJr8TNwYrqVV4o+tKB/rFg/1wg/1GOvBmo/lbH9OHqe7D4OSJ4JX3uqotQ=,
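Long messages like this one are easier to act on once the key fields are pulled out. The following Python sketch is a hypothetical helper, with regular expressions written only against the sample message above, that extracts the DELTA_LAKE_* codes, the staged file name, and the HTTP status code. The sample string is a shortened excerpt of that message.

```python
import re


def summarize_delta_lake_error(message):
    """Extract error codes, staged file name and HTTP status from an error message.

    Hypothetical helper; the patterns are based only on the sample
    DELTA_LAKE_34 / DELTA_LAKE_32 message in this post.
    """
    # Unique DELTA_LAKE_* codes, in order of first appearance.
    codes = list(dict.fromkeys(re.findall(r"DELTA_LAKE_\d+", message)))
    staged = re.search(r"staged file '([^']+)'", message)
    status = re.search(r"Status Code: (\d+)", message)
    return {
        "codes": codes,
        "staged_file": staged.group(1) if staged else None,
        "status_code": int(status.group(1)) if status else None,
    }


if __name__ == "__main__":
    # Shortened excerpt of the error message shown above.
    sample = (
        "DELTA_LAKE_34 - Databricks Delta Lake load request failed: "
        "'DELTA_LAKE_32 - Could not copy staged file "
        "'sdc-2da25ea5-a255-46c8-830c-3bda3ee2c742.csv': "
        "(Service: Amazon S3; Status Code: 400; Error Code: 400 Bad Request)"
    )
    print(summarize_delta_lake_error(sample))
```

The staged file name and the status code are useful details to include when asking for read access to the staging location.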

Solution: Check whether S3 or ADLS is your staging location, and ask the respective administrators to grant your Databricks cluster read access to that S3 bucket or ADLS storage account.

Note: The credentials provided in the staging configuration of the Databricks Delta Lake destination are what the SDC instance uses to write to S3 or ADLS. To load data into a table's Delta Lake location, the Databricks cluster needs its own access, either through an instance profile (S3) or through a key configured for the storage account (ADLS).
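Which access the cluster needs depends on the staging URI. The sketch below is a hypothetical helper: for an ADLS Gen2 URI of the form abfss://<container>@<account>.dfs.core.windows.net/... it returns the container, the storage account, and the Spark configuration key fs.azure.account.key.<account>.dfs.core.windows.net under which an account key can be set for the cluster; for an S3 URI it returns the bucket that the cluster's instance profile must be allowed to read. The bucket name my-staging-bucket is a placeholder.

```python
from urllib.parse import urlparse


def storage_access_hint(staged_uri):
    """Describe the storage the Databricks cluster must be able to read (hypothetical helper)."""
    parsed = urlparse(staged_uri)
    if parsed.scheme in ("abfs", "abfss"):
        # netloc looks like <container>@<account>.dfs.core.windows.net
        container, _, host = parsed.netloc.partition("@")
        return {
            "storage": "ADLS",
            "container": container,
            "account": host.split(".")[0],
            # Spark config key for an account key on this storage account
            "spark_conf_key": f"fs.azure.account.key.{host}",
        }
    if parsed.scheme in ("s3", "s3a", "s3n"):
        # The cluster's instance profile needs read access to this bucket.
        return {"storage": "S3", "bucket": parsed.netloc}
    raise ValueError(f"Unsupported staging URI: {staged_uri}")


if __name__ == "__main__":
    print(storage_access_hint(
        "abfss://pradeep@bucketname.dfs.core.windows.net/"
        "sdc-3a6ca247-1cc8-494f-8bd1-1ff6684ddd0c.csv"
    ))
    print(storage_access_hint("s3://my-staging-bucket/sdc-example.csv"))
```

The ADLS example uses the staged file path from the COPY query in this post; the S3 example is made up.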

If the Databricks cluster is reachable, SDC can write to the configured staging location, and the Databricks cluster can read from it, the next step is to check whether any jobs were submitted to Spark. Look at the Spark SQL query (for example, in the SQL tab of the Spark UI) to see the original query that was submitted. It will look something like the following:

COPY INTO <table-name> FROM (SELECT CAST(_c0 AS string) AS intr_sk, CAST(_c1 AS string) AS eff_beg_dt, CAST(_c2 AS string) AS cele_evt_ind, CAST(_c3 AS string) AS jfw_evt_ind, CAST(_c4 AS string) AS mlt_topc_pgm_ind, CAST(_c5 AS string) AS cret_user_id, CAST(_c6 AS string) AS cret_tmsp, CAST(_c7 AS string) AS lst_updt_user_id, CAST(_c8 AS string) AS lst_updt_tmsp FROM "abfss://pradeep@bucketname.dfs.core.windows.net/sdc-3a6ca247-1cc8-494f-8bd1-1ff6684ddd0c.csv") FILEFORMAT = CSV FORMAT_OPTIONS ('header' = 'false','quote' = '"','delimiter' = ',','escape' = '\\') COPY_OPTIONS ('force' = 'true')
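When comparing the query in the Spark UI with what you expected, it can help to rebuild it from the column list. The sketch below is a hypothetical helper that reproduces the shape of the query above: each CSV column _c<i> is cast to string and aliased to the i-th target column, and the FORMAT_OPTIONS and COPY_OPTIONS are copied verbatim. SDC generates the real query itself; this only illustrates how the positional _c columns map to table columns.

```python
def build_copy_into(table, columns, staged_uri):
    """Build a COPY INTO statement shaped like the sample query (hypothetical helper)."""
    # Positional mapping: CSV column _c<i> -> i-th target column, cast to string.
    select_list = ", ".join(
        f"CAST(_c{i} AS string) AS {name}" for i, name in enumerate(columns)
    )
    return (
        f"COPY INTO {table} FROM (SELECT {select_list} "
        f'FROM "{staged_uri}") FILEFORMAT = CSV '
        "FORMAT_OPTIONS ('header' = 'false','quote' = '\"',"
        "'delimiter' = ',','escape' = '\\\\') "
        "COPY_OPTIONS ('force' = 'true')"
    )


if __name__ == "__main__":
    columns = [
        "intr_sk", "eff_beg_dt", "cele_evt_ind", "jfw_evt_ind", "mlt_topc_pgm_ind",
        "cret_user_id", "cret_tmsp", "lst_updt_user_id", "lst_updt_tmsp",
    ]
    print(build_copy_into(
        "<table-name>",
        columns,
        "abfss://pradeep@bucketname.dfs.core.windows.net/"
        "sdc-3a6ca247-1cc8-494f-8bd1-1ff6684ddd0c.csv",
    ))
```

A mismatch between the column order of the staged file and the target column list would show up in this positional mapping.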