Product:

- StreamSets Data Collector (SDC)

- StreamSets Control Hub (SCH)

Issue:

- Is it possible to pre-process error records before sending them to the destination (a Kafka topic or Local FS)?

- How can start/stop events and error records be sent to a different pipeline?

Solution:

Example:

- pipeline-1 generates error records.

- pipeline-2 (Error records handler) will receive the error records from pipeline-1.

Steps to send Error Records to a different pipeline:

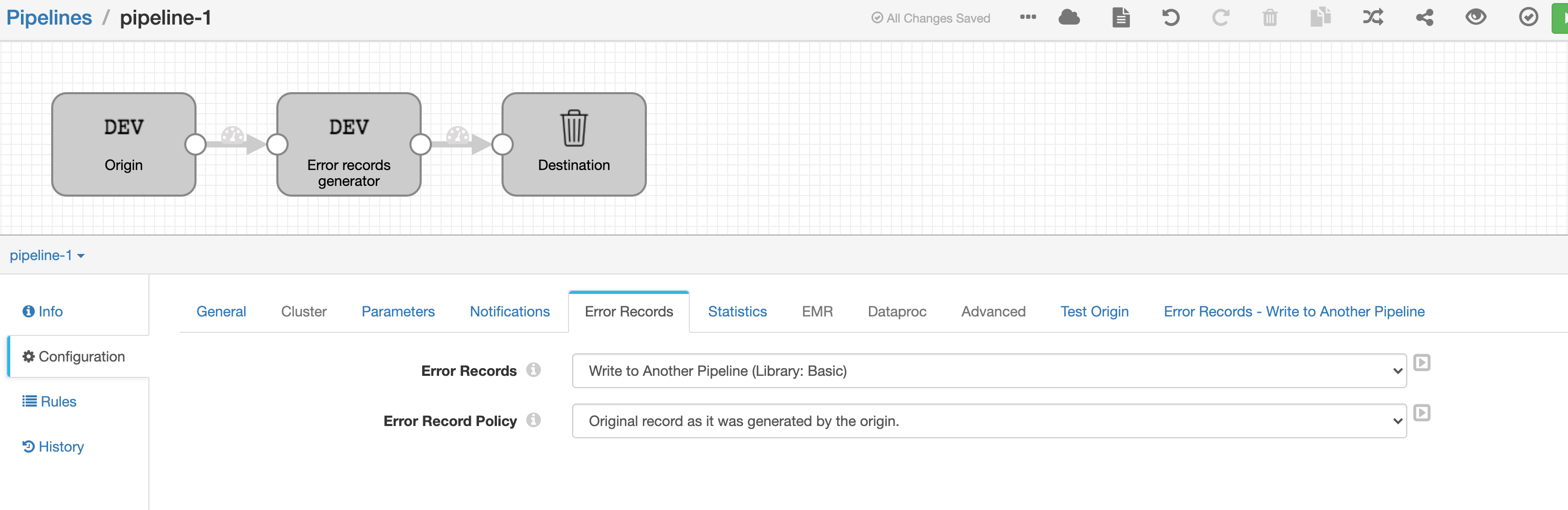

1. In pipeline-1, click the Error Records tab and select Write to Another Pipeline in the Error Records property.

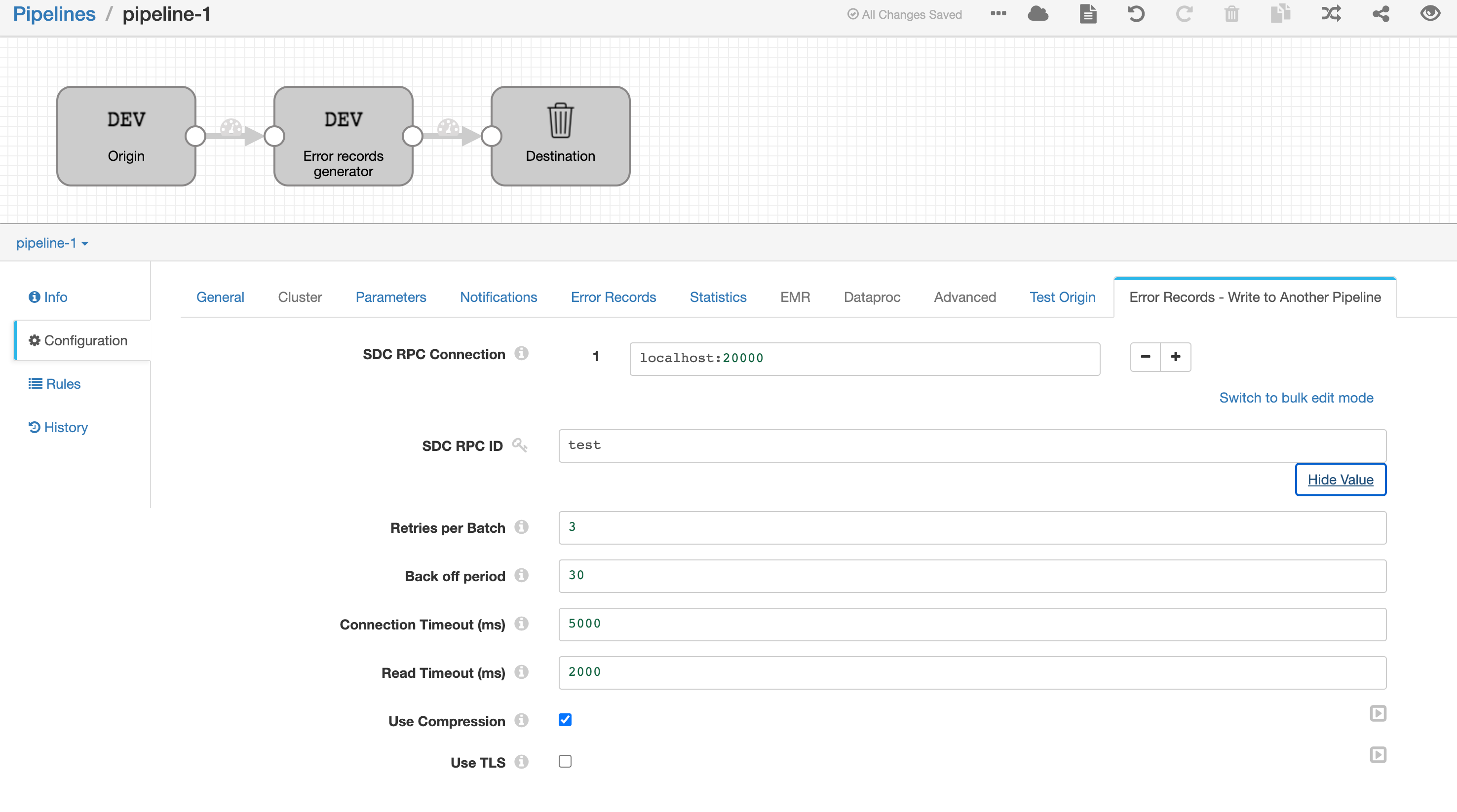

2. Click Error Records - Write to Another Pipeline and enter the following details:

SDC RPC Connection: <IP-address>:20000 ---> (the IP address of the node where pipeline-2 is running, followed by any free port number)

SDC RPC ID: test ---> (any name)

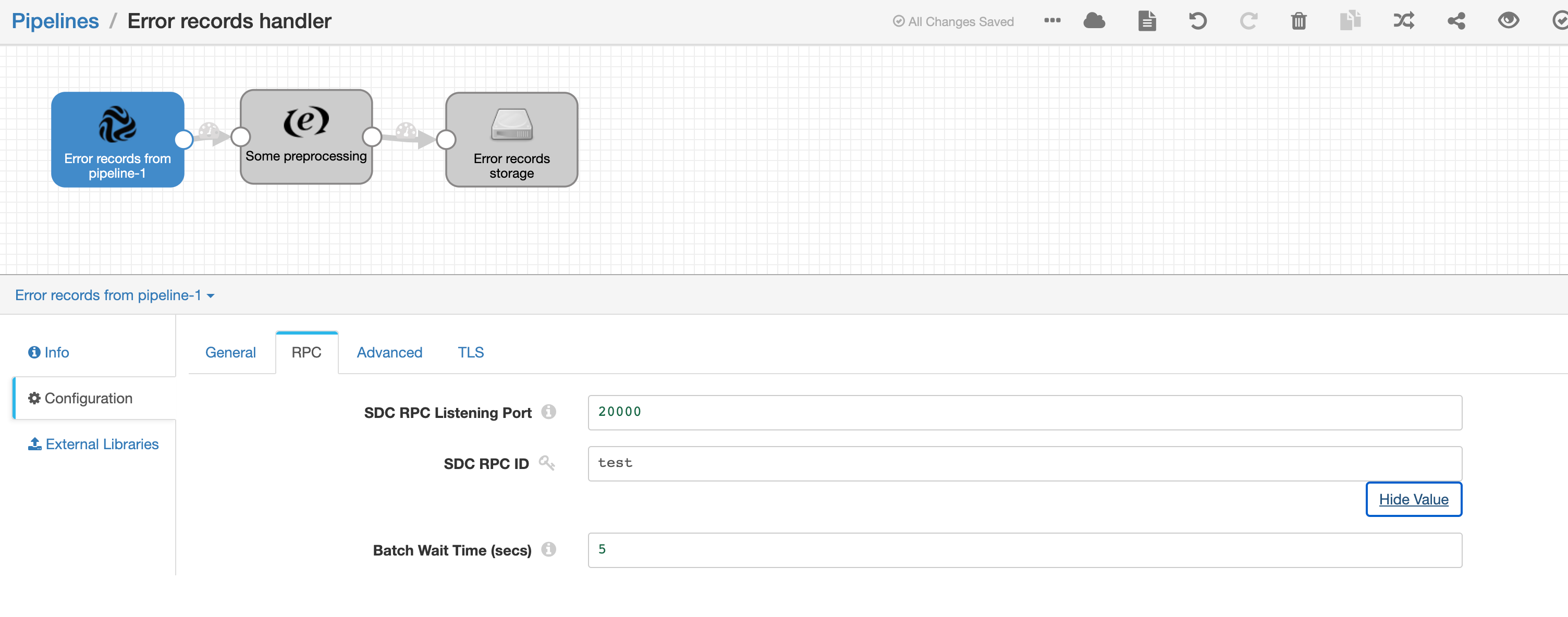

3. In pipeline-2, select the SDC RPC origin, click the RPC tab, and enter the following details:

SDC RPC Listening Port: 20000 ---> (the same port number used in step 2)

SDC RPC ID: test ---> (the same name used in step 2)

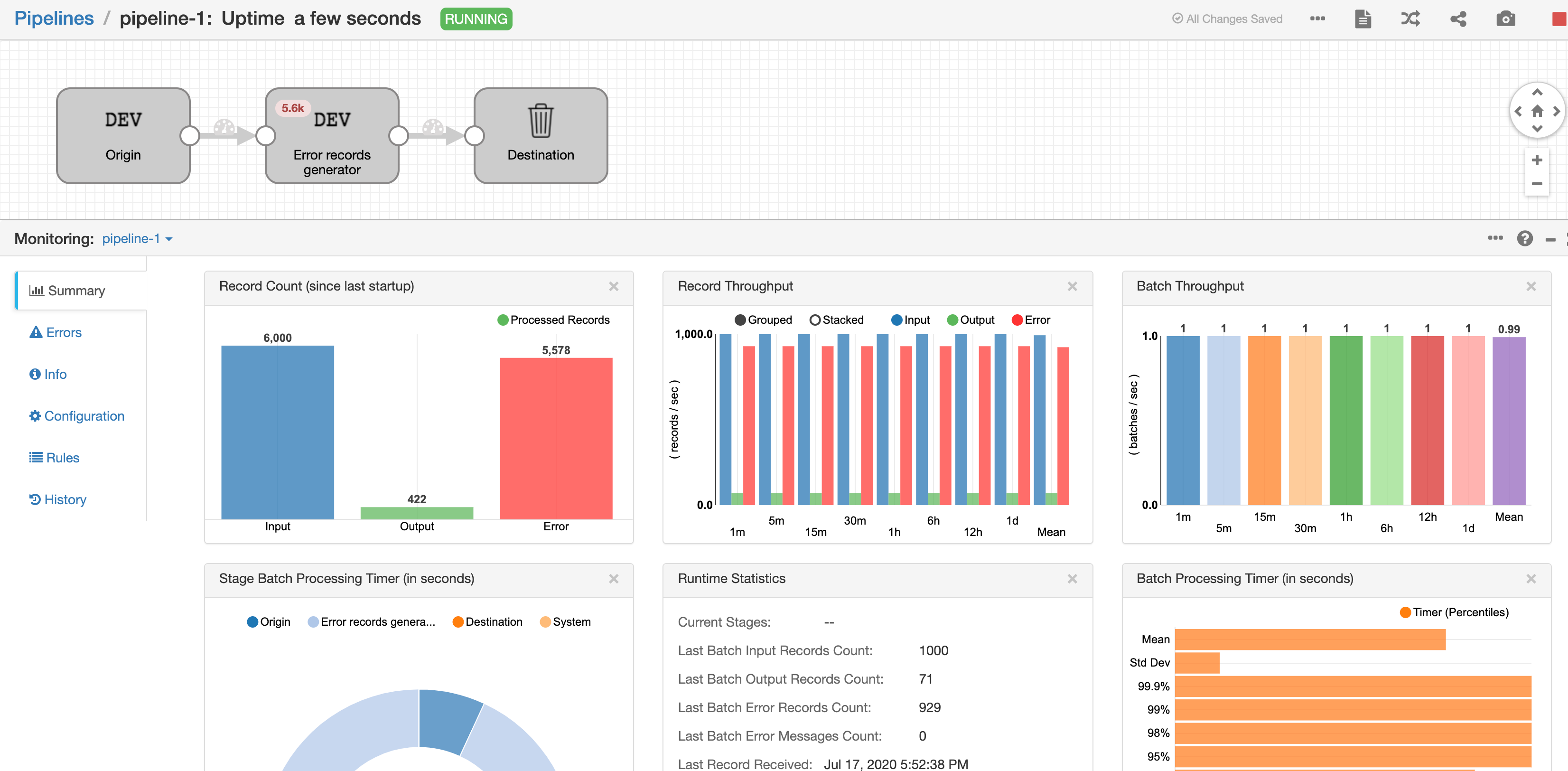

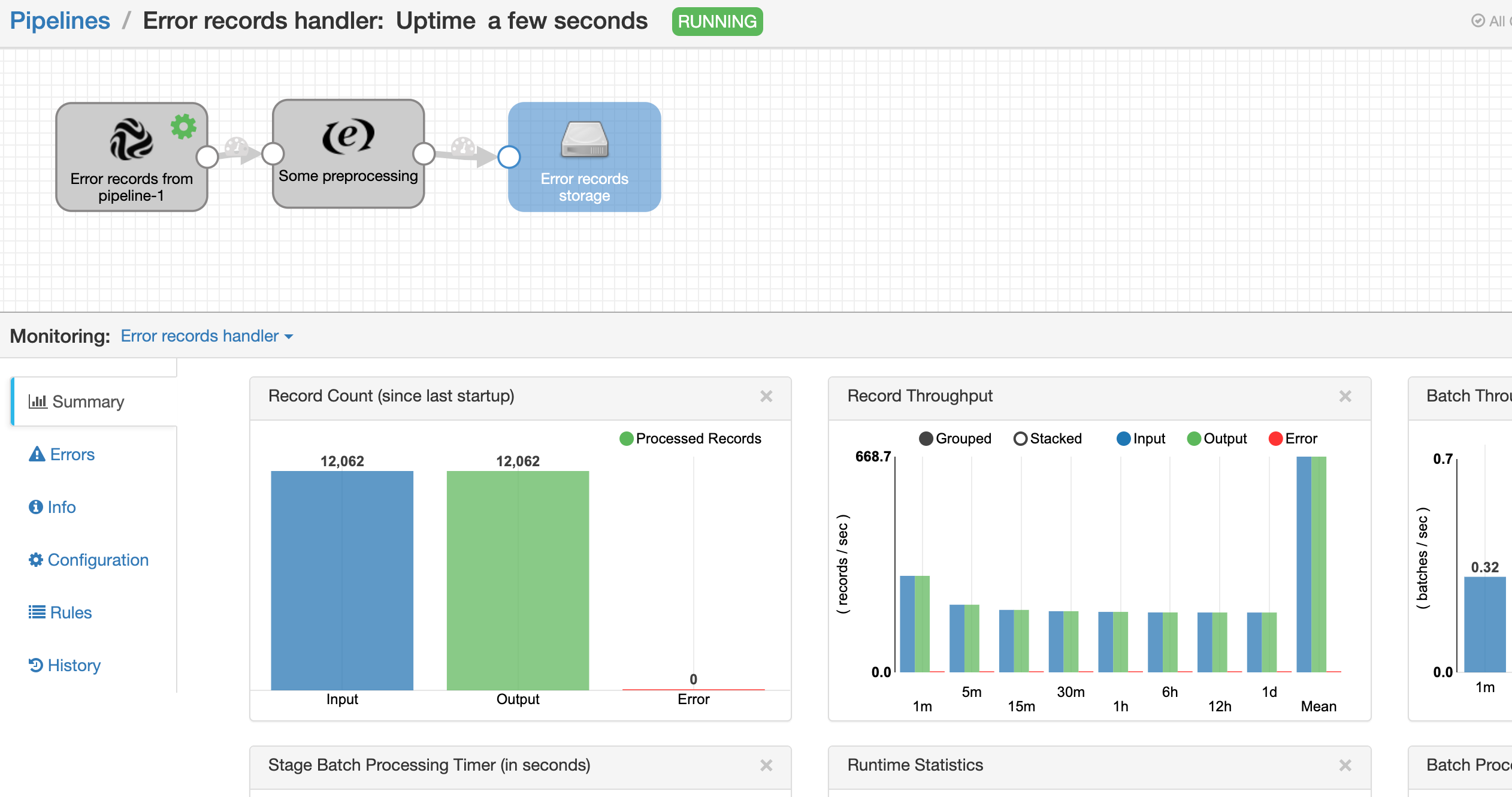

4. Start pipeline-2 first, then pipeline-1. Both pipelines should then look as shown:

Pipeline 1:

Pipeline 2:

Pipelines: Both pipelines are attached to the KB article.
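
The values entered in steps 2 and 3 must agree with each other. As a rough sketch only, the Python helper below compares the two sets of values and lists any mismatch before the pipelines are started. It is not part of SDC: the function name `find_mismatches` and the dictionary keys are hypothetical and chosen for illustration, and the values have to be copied in by hand from the pipeline configuration.

```python
def find_mismatches(destination, origin):
    """Compare pipeline-1 (destination side) and pipeline-2 (origin side) settings.

    destination -- dict with hypothetical keys "connection" and "rpc_id",
                   holding SDC RPC Connection and SDC RPC ID from pipeline-1
    origin      -- dict with hypothetical keys "listening_port" and "rpc_id",
                   holding SDC RPC Listening Port and SDC RPC ID from pipeline-2
    Returns a list of human-readable problems (empty if the settings agree).
    """
    problems = []

    # The port is whatever follows the last ':' in SDC RPC Connection.
    host, sep, port_text = destination["connection"].strip().rpartition(":")
    if not sep or not host:
        problems.append("SDC RPC Connection must have the form <IP-address>:<port>")
    elif port_text.strip() != str(origin["listening_port"]):
        problems.append(
            f"port {port_text.strip()} in pipeline-1 does not match "
            f"listening port {origin['listening_port']} in pipeline-2"
        )

    # The SDC RPC ID must be identical on both sides.
    if destination["rpc_id"] != origin["rpc_id"]:
        problems.append(
            f"SDC RPC ID {destination['rpc_id']!r} in pipeline-1 does not match "
            f"{origin['rpc_id']!r} in pipeline-2"
        )
    return problems
```

For the example values used in this article, `find_mismatches({"connection": "<IP-address>:20000", "rpc_id": "test"}, {"listening_port": 20000, "rpc_id": "test"})` returns an empty list.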

Steps to send start/stop events to a different pipeline:

For start events:

1. In pipeline-1, select the General tab and select Write to Another Pipeline in Start Event.

2. Click Start Event - Write to Another Pipeline and follow the same steps described for error records.

For stop events:

1. Follow the same steps as for start events, using Stop Event and Stop Event - Write to Another Pipeline instead.

Common issues while running this pipeline:

Error-1:

IPC_DEST_15 - Could not connect to any SDC RPC destination: ['localhost:20000': java.net.ConnectException: Connection refused (Connection refused)]

Solution:

- This error occurs when pipeline-1 is started before pipeline-2, because nothing is listening on the port yet.

- Make sure pipeline-2 is started before pipeline-1.

(OR)

- It also occurs when the port number is incorrect.

- Make sure the port in SDC RPC Connection in pipeline-1 matches the SDC RPC Listening Port in pipeline-2.

SCH:

- If you are running these pipelines as jobs from SCH, make sure both pipelines run on the same SDC, or use the correct IP address of the SDC running pipeline-2 in pipeline-1.
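
A quick way to tell whether anything is listening on the target host and port is to attempt a plain TCP connection from the machine that runs pipeline-1. The following is a minimal sketch using only the Python standard library; the function name `can_connect` is hypothetical, and the host and port are whatever was entered in SDC RPC Connection. A successful connection only shows that the port is open; it does not verify the SDC RPC ID.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection raises an OSError subclass (for example
        # ConnectionRefusedError or a timeout) when the port is not reachable.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

If `can_connect("localhost", 20000)` returns `False` while pipeline-2 is supposed to be running, check that pipeline-2 has actually started and that the host and port are correct.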

Error-2:

IPC_DEST_05 - Invalid port number in '20000': java.lang.IllegalArgumentException: Destination does not include ':' port delimiter

Solution:

- Make sure SDC RPC Connection in pipeline-1 contains both the IP address and the port, in the form <IP>:<Port>.
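
To illustrate the expected format, the sketch below splits a connection value into host and port and rejects a value such as `20000` that has no `:` delimiter. It is a hypothetical helper written for this article (the name `parse_rpc_connection` is an assumption) and does not reproduce SDC's own validation logic.

```python
def parse_rpc_connection(value):
    """Split an SDC RPC Connection value into (host, port) or raise ValueError."""
    # Split on the last ':' so that the port is always the final component.
    host, sep, port_text = value.strip().rpartition(":")
    if not sep or not host:
        raise ValueError(f"{value!r} must have the form <IP>:<Port>")
    port_text = port_text.strip()
    if not port_text.isdecimal() or not 1 <= int(port_text) <= 65535:
        raise ValueError(f"{port_text!r} is not a valid port number")
    return host, int(port_text)
```

With this helper, `parse_rpc_connection("10.0.0.5:20000")` yields the host and the integer port, while `parse_rpc_connection("20000")` raises `ValueError`.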

Error-3:

IPC_DEST_15 - Could not connect to any SDC RPC destination: ['localhost:20000': Invalid 'appId']

Solution:

- Make sure both pipelines use the same SDC RPC ID.